An overview of this chapter’s contents and take-aways can be found here.

The final chapter of this script gives a brief introduction to econometrics. Put simply, econometrics is the study of methods that allow statistical analysis of economic issues. To understand what we do in econometrics and statistics, we first need to introduce some concepts and results from probability theory.


Definition: Probability Space.

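A probability space is a triple $(\Omega, \mathcal{A}, P)$, where

- $\Omega$ is the sample space, i.e. the set of all possible outcomes,
- $\mathcal{A}$ is the event space, i.e. a collection of subsets of $\Omega$ (the events), and
- $P: \mathcal{A} \to [0,1]$ is a probability measure that assigns a probability to every event.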

Probability spaces are best illustrated with a simple example: consider the case of rolling a regular dice. Before the dice is rolled, the set of possible outcomes is $\Omega = \{1, 2, 3, 4, 5, 6\}$. Examples of events that could occur are a specific number being rolled, e.g. $\{\omega\}$ for a single $\omega \in \Omega$, or that an even number is rolled, $\{2, 4, 6\}$. Generally, any combination of elements in $\Omega$ may constitute an event.

It remains to define the probability measure $P$ that assigns events $A \in \mathcal{A}$ a probability between 0 and 1. Clearly, the measure should assign $\Omega$, the event that any number between 1 and 6 is rolled, a probability of 1, and conversely, not turning out a number between 1 and 6 should have zero probability. Lastly, thinking about the probability that either a 1 or a 2 is thrown with a fair dice, it is intuitively clear that the probabilities of both events should add up. These three requirements are in fact sufficient to define a probability measure:

Definition: Probability Measure.

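Consider a sample space $\Omega$ and an event space $\mathcal{A}$. Then, a function $P: \mathcal{A} \to [0,1]$ is called a probability measure on $(\Omega, \mathcal{A})$ if

- $P(\Omega) = 1$, and
- for any (finite or countable) collection of pairwise disjoint events $A_1, A_2, \ldots \in \mathcal{A}$, $P\left(\bigcup_i A_i\right) = \sum_i P(A_i)$.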

For the second property, the focus on disjoint sets is crucial: if some elements $\omega$ would realize both $A$ and $B$, i.e. $A \cap B \neq \emptyset$, then additivity need not hold. Note that by the second property, it is usually sufficient to know the probability of events referring to individual elements of $\Omega$, as any event $A$ can be decomposed into a disjoint union of such events: $A = \bigcup_{\omega \in A} \{\omega\}$, $P(A) = \sum_{\omega \in A} P(\{\omega\})$. For notational simplicity, we define $P(\omega) := P(\{\omega\})$ for $\omega \in \Omega$.
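The decomposition of an event into disjoint singletons can be sketched in a few lines of Python. This is only an illustration for the fair dice; the names `OMEGA`, `P_SINGLE` and `prob` are chosen here and do not appear elsewhere in the script.

```python
from fractions import Fraction

# Sample space of a fair dice and the probability of each single outcome.
OMEGA = frozenset(range(1, 7))
P_SINGLE = {omega: Fraction(1, 6) for omega in OMEGA}


def prob(event):
    """P(A) as the sum of P({omega}) over the disjoint singletons in A."""
    event = set(event)
    if not event <= OMEGA:
        raise ValueError("event must be a subset of the sample space")
    return sum((P_SINGLE[omega] for omega in event), Fraction(0))


print(prob(OMEGA))      # probability of the whole sample space
print(prob(set()))      # probability of the impossible event
print(prob({2, 4, 6}))  # probability that an even number is rolled
```

Using exact fractions instead of floating point numbers keeps the additivity property visible without rounding noise.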

As an exercise, try to define the probability measure that characterizes rolling a rigged dice for which it is twice as likely to roll a 6 as it is to roll a 1, but all other numbers remain as likely as with a fair dice.

To introduce conditional probabilities intuitively, consider again the example of the regular dice. What is the probability of throwing a 2, given that you already know that the outcome is an even number? Intuitively, it is clear that we now must compare the probability of a 2 against the probability of the subset of even numbers instead of the whole sample space. We formalize this intuition as follows:

Definition: Conditional Probability.

Consider a sample space $\Omega$ and an event space $\mathcal{A}$ and two events $A, B \in \mathcal{A}$ with $P(B) > 0$. The conditional probability of $A$ given $B$ is defined as:

\[ P(A|B) = \frac{P(A \cap B)}{P(B)} \]

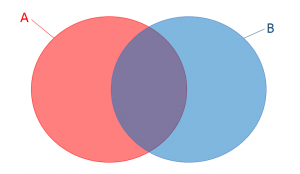

For the following considerations, the picture above provides helpful intuition. The probability of the union of the two non-disjoint events A and B is given by

\[ P(A \cup B) = P(A) + P(B) - P(A \cap B) \]
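As a quick check of the two formulas for the fair dice, the following sketch computes the probability of a 2 given an even number and the probability of a union of two overlapping events. The helper names and the event `small` are illustrative choices, not part of the script.

```python
from fractions import Fraction

OMEGA = set(range(1, 7))


def prob(event):
    """Probability of an event under a fair dice: |A| / |Omega|."""
    return Fraction(len(set(event) & OMEGA), len(OMEGA))


def cond_prob(a, b):
    """P(A | B) = P(A and B) / P(B), defined only if P(B) > 0."""
    if prob(b) == 0:
        raise ValueError("conditioning event must have positive probability")
    return prob(set(a) & set(b)) / prob(b)


two, even, small = {2}, {2, 4, 6}, {1, 2}

p_two_given_even = cond_prob(two, even)
# Union of two non-disjoint events: subtract the overlap once.
p_union = prob(even) + prob(small) - prob(even & small)

print(p_two_given_even, p_union)
```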

The following result will be very helpful when working with conditional probabilities:

Theorem: Bayes’ Rule.

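Consider disjoint sets $A_1, \dots, A_n$ where $A_i \in \mathcal{A}$ and $\bigcup_{i=1}^n A_i = \Omega$ and $P(A_i) > 0$ for all $i$, and an event $B \in \mathcal{A}$ with $P(B) > 0$. For all $i \in \{1, \dots, n\}$,

\[ P(A_i | B) = \frac{P(B | A_i) P(A_i)}{\sum_{j=1}^n P(B | A_j) P(A_j)} \]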

Definition: Independence.

Two events $A, B \in \mathcal{A}$ are said to be stochastically independent if:

\[ P(A \cap B) = P(A) P(B) \]

For all $A, B \in \mathcal{A}$ with $P(B) > 0$, independence implies that $P(A|B) = P(A)$. As an example of two independent events in our dice example, consider the event of throwing a number strictly smaller than 3, and the event of throwing an odd number. The probability of throwing an odd number is $1/2$, and the conditional probability of throwing an odd number given that the number is strictly smaller than 3 is also $1/2$.
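The independence claim for the dice example can be verified directly. The sketch below is illustrative only and uses exact fractions; the variable names are assumptions made for this example.

```python
from fractions import Fraction

OMEGA = set(range(1, 7))


def prob(event):
    """Probability of an event under a fair dice."""
    return Fraction(len(event & OMEGA), len(OMEGA))


below_three = {1, 2}   # number strictly smaller than 3
odd = {1, 3, 5}        # odd number

joint = prob(below_three & odd)           # P(A and B)
product = prob(below_three) * prob(odd)   # P(A) * P(B)
independent = joint == product
odd_given_below_three = joint / prob(below_three)

print(independent, odd_given_below_three)
```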

In most practical scenarios, we care less about the realized events themselves than about the (numeric) outcomes that they imply. For instance, when you plan on going to the beach, you do not want to know all the meteorological conditions $\omega$ in the space $\Omega$ of possible conditions, but you care about functions of them, say $X_1(\omega)$ and $X_2(\omega)$, where $X_2$ measures the temperature in degrees Celsius implied by the conditions $\omega$. In other words, you care about two random variables: variables $X_1, X_2$ on the real line that are determined by (possibly much richer) outcomes in the sample space $\Omega$ of a probability space.

Definition: Random Variable, Random Vector.

Consider a probability space $(\Omega, \mathcal{A}, P)$. Then, a function $X: \Omega \to \mathbb{R}$ is called a random variable, and a vector $X = (X_1, \dots, X_n)'$ where $X_i$, $i \in \{1, \dots, n\}$, are random variables, is called a random vector.

This definition of the random variable concept is deliberately broad. Those with a stronger background in statistics or econometrics may excuse that it is vague and simplistic; the concept is not straightforward to define rigorously, and for our purposes, it suffices to focus on the characteristics stated in the definition.

Continuing the example of the fair dice roll, let’s consider a game where I give you 100 Euros if you roll a 6, but you get nothing otherwise. Can you define the function $X: \Omega \to \mathbb{R}$ that describes your profit from this game, assuming for now I let you play for free?

A key concept related to random variables $X$ is their distribution, i.e. the description of probabilities characterizing the possible realizations of $X$. Our leading example of the dice roll, and the game where you can win 100 Euros, is an example of a discrete random variable, characterized by a discrete probability distribution. For such random variables, there is a finite (or at most countable) number of realizations (in our case: two, either 0 Euros or 100 Euros), and every realization may occur with a strictly positive probability (5/6 vs. 1/6).

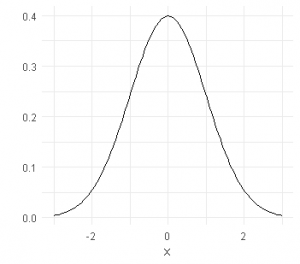

The other case is a continuous probability distribution, with uncountably many realizations, none of which can be attributed positive probability: $P(X = x) = 0$ for any $x \in \mathbb{R}$. Probably the most famous example of a continuous distribution is the normal distribution. As an example, consider the weight of a package of cornflakes. The machine filling the packages aims for a certain weight, but getting a package with exactly this weight has probability zero. If you have a very large number of cornflakes packages and stack packages with weights in a given interval, ordering these intervals from the smallest to the largest, you will get a curve that looks somewhat like a discretized version of the curve in the plot. We call this curve the density, and the integral of the density the distribution of the random variable. The density of a normal random variable is given as $f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)$. The plot shows the density of a normal random variable with mean $\mu = 0$ and standard deviation $\sigma = 1$. We call this a standard normal random variable.

Often, we characterize the distribution by its cumulative distribution function $F(x) = P(X \leq x)$, in our case $\Phi(x) = \int_{-\infty}^{x} \frac{1}{\sqrt{2\pi}} e^{-t^2/2}\, dt$.

The probability density function we have seen above is the derivative of this function: $f(x) = F'(x)$.
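The relationship between density and cumulative distribution function can be checked numerically. The sketch below assumes the usual normal density and expresses the normal CDF through the error function `math.erf` from the Python standard library; the function names are illustrative.

```python
import math


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of a normal random variable with mean mu and std. dev. sigma."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def normal_cdf(x, mu=0.0, sigma=1.0):
    """P(X <= x) for a normal random variable, written via the error function."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def numerical_derivative(f, x, h=1e-5):
    """Central finite difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


x = 0.7
print(normal_pdf(x), numerical_derivative(normal_cdf, x))
```

The two printed numbers should agree up to the approximation error of the finite difference.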

Using the distribution of a random variable, we can now define its moments: the so-called p-th moment of the distribution is given as $E[X^p] = \int_{-\infty}^{\infty} x^p f(x)\, dx$. The first moment of a distribution is called its expected value.

Definition: Expected Value.

Consider a probability space $(\Omega, \mathcal{A}, P)$ and a random variable $X$ on this space, with probability density function $f_X$. Then, the expected value $E[X]$ of $X$ is defined as the following integral

\[ E[X] = \int_{-\infty}^{\infty} x f_X(x)\, dx \]

and for a transformation $g(X)$ of $X$,

\[ E[g(X)] = \int_{-\infty}^{\infty} g(x) f_X(x)\, dx \]

Definition: Variance, Standard Deviation.

Consider a probability space $(\Omega, \mathcal{A}, P)$ and a random variable $X$ on this space. Then, the variance of $X$ is defined as $Var(X) = E[(X - E[X])^2]$, and its square root is called the standard deviation of $X$, denoted $\sigma_X$.

The expected value for discrete distributions, when $\mathcal{X}$ is the set of values that $X$ can take with positive probability, simplifies to $E[X] = \sum_{x \in \mathcal{X}} x \cdot P(X = x)$. This shows the intuition: you expect the value $x$ with probability $P(X = x)$, and your expectation is then simply the sum of all these terms.
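For a discrete distribution, the expected value and the variance are plain weighted sums. The following sketch computes both for the number shown by a fair dice; the dictionary `pmf` and the helper `expectation` are names introduced for this illustration.

```python
from fractions import Fraction

# Probability mass function of the number shown by a fair dice.
pmf = {x: Fraction(1, 6) for x in range(1, 7)}


def expectation(pmf, g=lambda x: x):
    """E[g(X)] = sum over x of g(x) * P(X = x)."""
    return sum(g(x) * p for x, p in pmf.items())


mean = expectation(pmf)
variance = expectation(pmf, lambda x: (x - mean) ** 2)

print(mean, variance)
```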

All concepts considered so far could be developed on the basis of univariate random variables, but work similarly for random vectors, i.e., multiple random variables. Next, we are going to introduce concepts for more than one random variable.

Definition: Covariance, Correlation.

For two random variables $X$ and $Y$, the covariance and the correlation are defined as

\[ Cov(X, Y) = E[(X - E[X])(Y - E[Y])] \]

\[ Corr(X, Y) = \frac{Cov(X, Y)}{\sqrt{Var(X)}\sqrt{Var(Y)}} \]

If $Cov(X, Y) = 0$, X and Y are called uncorrelated. To further characterize how X and Y behave jointly, we can define their joint distribution. For brevity, we are again confining ourselves to the special cases of jointly discrete and jointly continuous random variables.

Definition: Joint Distribution.

![]()

\[ \sum_{k,l = 1}^{\infty} P(X = x_k, Y = y_l)\, \mathbf{1}(X = x_k, Y = y_l) \]

As a sidenote: defining the joint distribution as a function mapping from $\mathbb{R}^2$ to $[0,1]$ is not formally correct. Instead of $\mathbb{R}^2$, the domain of the probability measure is the Borel $\sigma$-algebra over $\mathbb{R}^2$. The wrong “definition” above was chosen to make the definition of marginal distributions more intuitive.

Definition: Marginal Distribution, Marginal Density.

Given two jointly continuously distributed random variables $X$ and $Y$ with joint density $f_{XY}$, the marginal distribution of X is defined as

\[ F_X(x) = \int_{-\infty}^{x} \int_{-\infty}^{\infty} f_{XY}(t, y)\, dy\, dt = \int_{-\infty}^{x} f_X(t)\, dt \]

where $f_X(x) = \int_{-\infty}^{\infty} f_{XY}(x, y)\, dy$ is called the marginal density of $X$.

Going from joint to marginal densities is pretty simple, as you have seen in the definition above: one “integrates out” the random variable that one is not interested in. Remember that given Fubini’s Theorem, the order of integration does not matter (at least for most cases we consider in economics). Thus, we can get marginal distributions for both $X$ and $Y$.

We can now translate the concept of independence to random variables:

Definition: Independence of Random Variables.

Two random variables $X$ and $Y$ are independent if, for any two sets of real numbers $A$ and $B$,

\[ P(X \in A, Y \in B) = P(X \in A)\, P(Y \in B) \]

In other words, X and Y are independent if, for all A and B, the events $\{X \in A\}$ and $\{Y \in B\}$ are independent.

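The independence of two random variables has important implications for their joint cumulative distribution function:

\[ F_{XY}(x, y) = F_X(x)\, F_Y(y) \]

which has the following implication for the joint probability mass function of jointly discrete random variables:

\[ P(X = x, Y = y) = P(X = x)\, P(Y = y) \]

and for the joint density of jointly continuous random variables

\[ f_{XY}(x, y) = f_X(x)\, f_Y(y) \]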

In words, two random variables X and Y are independent if knowing the value of one does not change the distribution of the other. Their joint density is thus simply the product of the marginal densities. This has important implications, some of which affect the way we can work with expectations, variances and covariances:

Theorem: Rules for Expected Values, Variances and Covariances.

Let $X, Y$ be random variables, $a, b$ be scalars, and $g, h$ functions, then:

Expected Values:

Variances:

Covariances:

As an exercise, try to prove the rules for variances and covariances using the rules established for expected values.
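Before attempting a proof, it can help to check such rules numerically. The sketch below verifies a few standard identities exactly for a fair dice roll; which identities appear in the theorem above is an assumption here, and the random variables `X` (number rolled) and `Y` (indicator of an even roll) as well as the scalars are chosen purely for illustration. A numerical check on one example is of course not a proof.

```python
from fractions import Fraction

# Outcomes of a fair dice, each with probability 1/6.
outcomes = {w: Fraction(1, 6) for w in range(1, 7)}


def X(w):
    """Number rolled."""
    return w


def Y(w):
    """Indicator that the roll is even."""
    return 1 if w % 2 == 0 else 0


def E(f):
    """Expected value of the random variable f."""
    return sum(f(w) * p for w, p in outcomes.items())


def var(f):
    """Variance of f as E[(f - E[f])^2]."""
    m = E(f)
    return E(lambda w: (f(w) - m) ** 2)


def cov(f, g):
    """Covariance of f and g as E[(f - E[f])(g - E[g])]."""
    mf, mg = E(f), E(g)
    return E(lambda w: (f(w) - mf) * (g(w) - mg))


a, b = 3, 5  # hypothetical scalars

print(var(lambda w: a * X(w) + b), a ** 2 * var(X))
print(var(lambda w: X(w) + Y(w)), var(X) + var(Y) + 2 * cov(X, Y))
```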

If you feel like testing your understanding of the discussion thus far, you can take a short quiz found here.

Having introduced joint and marginal distributions of random variables, we now introduce the conditional expectation function. We first focus on the simpler case of two jointly discrete random variables. We can write the probability mass function for X given $Y = y$, given $P(Y = y) > 0$, as

\[ P(X = x | Y = y) = \frac{P(X = x, Y = y)}{P(Y = y)} \]

For a given value of Y, we can thus define $E[X | Y = y]$ for all values y such that $P(Y = y) > 0$ as

\[ E[X | Y = y] = \sum_{x} x \cdot P(X = x | Y = y) \]
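For jointly discrete random variables, the conditional expectation is again a weighted sum, now using conditional probabilities as weights. The sketch below uses the dice example with $X$ the number rolled and $Y$ an indicator for an even roll; the joint distribution and all names are illustrative assumptions.

```python
from fractions import Fraction

# Joint pmf of (X, Y): X is the number rolled, Y = 1 if the roll is even.
joint = {(x, 1 - x % 2): Fraction(1, 6) for x in range(1, 7)}


def marginal_y(y):
    """P(Y = y), obtained by summing the joint pmf over x."""
    return sum(p for (_, yy), p in joint.items() if yy == y)


def cond_exp_x(y):
    """E[X | Y = y] = sum over x of x * P(X = x, Y = y) / P(Y = y)."""
    p_y = marginal_y(y)
    if p_y == 0:
        raise ValueError("conditioning value must have positive probability")
    return sum(x * p / p_y for (x, yy), p in joint.items() if yy == y)


print(cond_exp_x(1))  # expected roll given an even number
print(cond_exp_x(0))  # expected roll given an odd number
```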

In this course, we will leave out the more technical definitions of conditional expectations and simply note that for jointly continuous random variables, things work similarly. The twist is that the probability $P(Y = y)$ will be zero for any value y for a continuous random variable. Thus, we must work with joint and marginal density functions instead, to define the conditional density as:

\[ f_{X|Y}(x | y) = \frac{f_{XY}(x, y)}{f_Y(y)} \]

and the conditional expectation for $X$ given $Y = y$ with $f_Y(y) > 0$ as

\[ E[X | Y = y] = \int_{-\infty}^{\infty} x\, f_{X|Y}(x | y)\, dx \]

Typically, we are interested in the conditional expectation $E[X|Y]$ for the whole distribution of $Y$, and not just at a single value $y$. To characterize the conditional expectation for all values $y$ for which $f_Y(y) > 0$, we consider the conditional expectation as a function mapping from the support of Y to the real line $\mathbb{R}$:

Definition: Conditional Expectation.

The conditional expectation function $E[X|Y]$ is a function g with

\[ g: \text{supp}(Y) \to \mathbb{R}, \quad y \mapsto g(y) = E[X | Y = y] \]

This definition is imprecise, but the formal definition of the conditional expectation function is unintuitive, and thus left out of this course.

In econometrics, you will work a lot with conditional expectations, so it is useful to familiarize ourselves with some rules and properties:

Theorem: Rules for Conditional Expectations.

Let $X, Y$ be random variables, $a, b$ be scalars, and $g$ a function, then:

Before moving on to two central results, we need to define some convergence concepts for random variables:

Definition: Convergence in Probability.

A sequence of random variables $\{X_n\}_{n \in \mathbb{N}}$ converges in probability to some random variable $X$, if $\forall \varepsilon > 0$:

\[ \lim_{n \to \infty} P(|X_n - X| > \varepsilon) = 0 \]

Equivalently, we write $X_n \overset{p}{\to} X$ or $\text{plim}\, X_n = X$.

Definition: Convergence in Distribution.

A sequence of random variables $\{X_n\}_{n \in \mathbb{N}}$ converges in distribution to some random variable $X$, if and only if for the cumulative distribution functions, the following holds

\[ \lim_{n \to \infty} F_{X_n}(x) = F_X(x) \]

for all $x \in \mathbb{R}$ at which $F_X$ is continuous. Equivalently, we write $X_n \overset{d}{\to} X$.

To put it more simply, $X_n$ converges to $X$ in probability if for large $n$, $X_n$ and $X$ are as good as equal (deviation smaller than an arbitrarily small $\varepsilon$) with probability approaching 1. The fairly abstract definition of convergence in distribution has a relatively simple interpretation: the sequence of distribution functions $F_{X_n}$ associated with the sequence $\{X_n\}_{n \in \mathbb{N}}$ of random variables approaches the distribution function $F_X$ of $X$ pointwise, i.e. at any given location $x$ (this criterion is indeed weaker than the one of uniform convergence, where we would require convergence across locations $x$). In simpler words, if $n$ is large enough, then the distribution of $X_n$ should be as good as identical to the one of $X$. Note that in distinction to convergence in probability, specific realizations of $X_n$ can still be very different from those of $X$, but their probability distributions will coincide. Therefore, this concept is weaker than convergence in probability.

Using these convergence concepts, we can now introduce the Weak Law of Large Numbers and the Central Limit Theorem:

Theorem: Weak law of large numbers.

Consider a set $\{X_1, \dots, X_n\}$ of $n$ independently and identically distributed variables with $E[X_i] = \mu < \infty$. Then,

\[ \bar{X}_n := \frac{1}{n} \sum_{i=1}^{n} X_i \overset{p}{\to} \mu \]

In words, the WLLN tells us that the average of independent random variables that have the same distribution will converge in probability to the expected value of the distribution. This result moves us from theoretical considerations into the world of economics! Suppose we have a large but finite sample of observations of, e.g., household incomes. Using the law of large numbers, we now have an estimator for the expected value of the household income in our population that we can infer from an incomplete subset of this population. The next result is even more powerful:
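The law of large numbers is easy to observe in a small simulation. The sketch below draws fair dice rolls with Python's standard `random` module and prints the sample mean for growing sample sizes; the seed and the sample sizes are arbitrary choices made for this illustration.

```python
import random


def sample_mean_of_dice(n, rng):
    """Average of n simulated rolls of a fair dice."""
    return sum(rng.randint(1, 6) for _ in range(n)) / n


rng = random.Random(0)  # fixed seed so that the run is reproducible
for n in (10, 1_000, 100_000):
    # The sample mean should move closer to the expected value 3.5 as n grows.
    print(n, sample_mean_of_dice(n, rng))
```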

Theorem: Central limit theorem.

Consider a set $\{X_1, \dots, X_n\}$ of $n$ independently and identically distributed variables with $E[X_i] = \mu$ and $Var(X_i) = \sigma^2 < \infty$. Then, for $\bar{X}_n = \frac{1}{n} \sum_{i=1}^{n} X_i$, it holds that

\[ \sqrt{n}\, (\bar{X}_n - \mu) \overset{d}{\to} N(0, \sigma^2) \]

In words, we know that the average of iid random variables is a random variable itself, and the CLT tells us that for large $n$ its distribution is approximately normal, with the same expected value as each random variable in the sequence $\{X_i\}$ and a variance that is equal to the variance of the $X_i$ divided by $n$, the size of the sample. This result is very general and holds for sequences of random variables almost regardless of their distribution. This result explains the prominence of normal distributions in statistics and will be invaluable for econometrics. While the sample average gives you a good prediction for the expected value of a random variable $X_i$, it is silent on how good this prediction is. To assess how precise this prediction is, we can apply the CLT and use the variance to measure the degree of expected deviation of the mean from the expected value. As the variance is based on a square term, we usually re-normalize it by taking the square root when talking about deviations from the expected value, which we call the standard deviation, here $\sigma / \sqrt{n}$ for the sample mean.
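A simulation can also illustrate the statement of the CLT. The sketch below repeatedly computes $\sqrt{n}(\bar{X}_n - \mu)$ for fair dice rolls, for which $\mu = 3.5$ and $\sigma^2 = 35/12$, and compares the mean and variance of these draws with 0 and $\sigma^2$. The sample size, the number of repetitions and the seed are arbitrary choices for this illustration.

```python
import random
import statistics

MU = 3.5          # expected value of a fair dice roll
SIGMA2 = 35 / 12  # variance of a fair dice roll


def standardized_mean(n, rng):
    """sqrt(n) * (sample mean - MU) for n simulated fair dice rolls."""
    mean = sum(rng.randint(1, 6) for _ in range(n)) / n
    return n ** 0.5 * (mean - MU)


rng = random.Random(0)  # fixed seed so that the run is reproducible
draws = [standardized_mean(100, rng) for _ in range(5_000)]

# Mean should be close to 0, variance close to SIGMA2.
print(statistics.mean(draws), statistics.pvariance(draws), SIGMA2)
```

A histogram of `draws` would show the familiar bell shape, even though a single dice roll is uniformly distributed.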

You may test your understanding of the concepts thus far by answering two questions: First, if you were neutral to risk (i.e., you only care about your expected profit), would you play the game where you can win 100 Euros if you roll a 6 if you needed to buy in for 15 Euros (also think about whether you would spontaneously agree to this game without doing the math)? And second, what game do you prefer in terms of expectations, the one where you get 100 Euros for a 6, or the one where you get ![]() Euros, and ![]() is drawn randomly from ![]()? And which of these games has the more certain/less volatile outcome? For the latter game, you may use that the dice roll is independent of the randomly drawn number ![](), so that ![]() for any ![](). (If you get stuck, feel free to open and read through the solution.)

Having laid the foundation of stochastic concepts, this final section proceeds to discuss Ordinary Least Squares (OLS) regression as the most basic econometric model used in practice. OLS models aim to take the first step from correlations to causality by allowing us to “control for”/“hold constant” third confounding variables.

Suppose we set out to come up with a model that allows us to assess how a random variable $X$ affects another random variable $Y$. Suppose that we want to use this model to make predictions about Y in the future, where we do not know the realizations of Y, but we do know realizations of X. Given our prior knowledge about X, we can use the conditional expectation function to re-write Y as

\[ Y = E[Y|X] + \varepsilon \]

where $\varepsilon$ is the difference between the realized values of Y and its conditional expectation given X. We know that generally, $E[Y|X]$ is an unknown function $g(X)$. Moving forward, we can assume that the conditional expectation is linear. If you are unwilling to assume that the CEF is linear, note that by Taylor’s theorem we can approximate the function $g$ using polynomial functions of order p. The simplest such approximation is a linear function, i.e. a polynomial of order 1. This gives another justification for a linear specification of $E[Y|X]$. Under this specification, the equation becomes

\[ Y = X'\beta + \varepsilon \]

In this model, if we observe that $X$ changes by $\Delta X$ units, we expect $Y$ to change by $\Delta X'\beta$ units. Note that this does not assert anything about causality: a non-zero coefficient vector $\beta$ simply tells us that $X$ is useful in predicting $Y$. To move closer to a causal interpretation, let us consider an additional random vector $Z$ of length $m$ that may describe an indirect relationship between $X$ and $Y$. Similar to before, we can write

\[ Y = X'\beta + Z'\gamma + \varepsilon \]

Then, $\beta$ measures the predictive potential of $X$ for $Y$ conditional on $Z$, i.e. holding constant $Z$. This is what we call the ceteris paribus assumption: we hold all other important factors constant and measure the marginal effect of $X$ on $Y$. To see that $\beta$ measures the marginal effect of $X$ on $Y$, consider the partial derivative

\[ \frac{\partial E[Y | X, Z]}{\partial X} = \beta \]

By the linear nature of the model, the levels at which these variables are held constant do not affect the marginal effect of $X$ on $Y$.

Let us proceed with the linear model by further characterizing X as a random matrix of the following form:

\[ \mathbf{X} = \begin{pmatrix} 1 & x_{11} & \cdots & x_{1k} \\ 1 & x_{21} & \cdots & x_{2k} \\ \vdots & \vdots & & \vdots \\ 1 & x_{n1} & \cdots & x_{nk} \end{pmatrix} \]

X is the data matrix for the equation’s right hand side (RHS) that stacks the observations of the row vector $x_i'$ of covariates $(1, x_{i1}, \dots, x_{ik})$ for each individual $i$ in its rows. To make sure that you understand the structure of this matrix, think about what it would look like if you had a simple model with $k = 1$, i.e. only one control variable $x_{i1}$.

We can describe the relationship of interest as

\[ y = \mathbf{X}\beta + \varepsilon \tag{1} \]

Now that we have an explicit formula that we intend to use for predicting Y, the question arises how best to estimate it. As per the economist’s preference for the Euclidean space (recall that the Euclidean norm captures the direct distance), it seems natural to minimize the distance between the vector $y$ of data points for $Y$ and its prediction $\mathbf{X}b$.

We express the optimization problem minimizing the Euclidean distance between $y$ and the (approximation to the) conditional expectation function given $\mathbf{X}$ as:

\[ \min_{b \in \mathbb{R}^{k+1}} \| y - \mathbf{X} b \|_2 \quad \text{or} \quad \min_{b \in \mathbb{R}^{k+1}} \sqrt{\sum_{i=1}^n (y_i - b_0 - b_1 x_{i1} - \cdots - b_k x_{ik})^2} \]

Solving this should be a relatively simple task: there are no constraints, and the objective function entails a polynomial of the solution. Note that the square root as a monotonic transformation does not matter for the location of the extrema. Thus, we can solve the equivalent problem

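\[ \min_{b \in \mathbb{R}^{k+1}} \| y - \mathbf{X} b \|_2^2 = \min_{b \in \mathbb{R}^{k+1}} \sum_{i=1}^n (y_i - b_0 - b_1 x_{i1} - \cdots - b_k x_{ik})^2 \tag{2} \]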

This formulation gives the most common estimators of the linear model their name: least-squares estimators. Note that $e_i = y_i - x_i'b$, where $x_i' = (1, x_{i1}, \dots, x_{ik})$ is the $i$-th row of $\mathbf{X}$, is the error in predicting $y_i$ using $x_i'b$, which we also call the residual. Therefore, the solution $\hat\beta$, provided that it exists, minimizes the residual sum of squares $\sum_{i=1}^n e_i^2$, i.e. the sum of squared prediction errors.

We are now set up to derive the (hopefully unique) solution for the estimator $\hat\beta$. To test your understanding of Chapter 4, you can try to derive it yourself before reading on. To help you get started, things remain more tractable if you stick with the vector notation, and start from the problem

\[ \min_{b\in \mathbb{R}^{k+1}} (y - \mathbf{X}b)'(y - \mathbf{X}b) \]

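If you have tried to solve it, or you want to read on directly, let’s get to the solution of this problem. The first step is to multiply out the objective. Doing so, the problem becomes

\[ \min_{b\in \mathbb{R}^{k+1}} \; y'y - 2 b'\mathbf{X}'y + b'\mathbf{X}'\mathbf{X}b \]

Taking the rules for derivatives with respect to vectors as given, we know that

\[ \frac{\partial\, b'\mathbf{X}'y}{\partial b} = \mathbf{X}'y \hspace{0.5cm}\text{and}\hspace{0.5cm} \frac{\partial\, b'\mathbf{X}'\mathbf{X}b}{\partial b} = 2\mathbf{X}'\mathbf{X}b \]

Putting these together, the first order condition is

\[ -2\mathbf{X}'y + 2\mathbf{X}'\mathbf{X}b = 0 \]

so that the solution $\hat\beta$ must satisfy

\[ \mathbf{X}'\mathbf{X}\hat\beta = \mathbf{X}'y \]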

While it looks like we are almost there, there are two things that we need to address: (i) can we invert $\mathbf{X}'\mathbf{X}$ to obtain a unique solution? (ii) If we can, does this solution constitute a (strict) local minimum? (And is this local minimum global?)

We know that matrices of the form $A'A$ for $A \in \mathbb{R}^{n\times m}$ are always positive semi-definite: for $v \in \mathbb{R}^m$, $v'A'Av = w'w = \sum_{i=1}^n w_i^2 \geq 0$ for $w = Av$. Now, recalling Chapter 2, this matrix is indeed positive definite if for any $v \neq 0$, $Av \neq 0$, which is the case if $A$ has full column rank, i.e. no column of $A$ can be written as a linear combination of the remaining columns. If the matrix $\mathbf{X}'\mathbf{X}$ is positive definite, it is invertible.

In models with many RHS variables, the issue of collinearity can be difficult to spot. If you have vectors $x_1$ and $x_2$ of observations for random variables $X_1$ and $X_2$, respectively, then collinearity occurs if $x_1 = \lambda x_2$ for some $\lambda \in \mathbb{R}$. In practice, statistical software will usually give you an error message if the full-rank condition is violated in your data.
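The rank condition can also be checked by hand. The following is a minimal sketch in Python with NumPy, using simulated data; the variable names and the factor 3.0 are purely illustrative assumptions. It compares a design matrix with two proportional columns to one without.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 50
x1 = rng.normal(size=n)
x2 = 3.0 * x1                 # hypothetical regressor, perfectly collinear with x1
x3 = rng.normal(size=n)       # unrelated regressor

X_bad = np.column_stack([np.ones(n), x1, x2])
X_ok = np.column_stack([np.ones(n), x1, x3])

# Full column rank means rank == number of columns.
rank_bad = np.linalg.matrix_rank(X_bad)   # one column is redundant
rank_ok = np.linalg.matrix_rank(X_ok)

# X'X is positive definite exactly when its smallest eigenvalue is > 0.
eig_bad = np.linalg.eigvalsh(X_bad.T @ X_bad)
eig_ok = np.linalg.eigvalsh(X_ok.T @ X_ok)

print("collinear design:   rank", rank_bad, "of", X_bad.shape[1])
print("well-behaved design: rank", rank_ok, "of", X_ok.shape[1])
```

With the collinear design, the smallest eigenvalue of the cross-product matrix is numerically zero, so the matrix cannot be inverted reliably.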

Returning to the optimization problem, if the matrix $\mathbf{X}'\mathbf{X}$ is invertible, then the unique solution is

\[ \hat\beta = (\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'y \]

and it constitutes a strict local minimum by positive definiteness of $2\mathbf{X}'\mathbf{X}$, the second derivative of the objective function. This local solution is also global, as for any $v \neq 0$, $v'\mathbf{X}'\mathbf{X}v > 0$, so that the objective diverges to $+\infty$ in any asymptotic direction.
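To make the closed-form solution concrete, here is a minimal sketch in Python with NumPy. The data are simulated, and the "true" coefficients (1.0, 2.0) are an arbitrary assumption made only for illustration. The sketch solves the first-order condition as a linear system rather than forming the inverse explicitly, which is numerically safer but yields the same estimator.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: n observations, one regressor plus a constant.
n = 200
x = rng.normal(size=n)
e = rng.normal(scale=0.5, size=n)
beta_true = np.array([1.0, 2.0])         # illustrative (intercept, slope)

X = np.column_stack([np.ones(n), x])     # design matrix with a column of ones
y = X @ beta_true + e

# Solve the first-order condition X'X b = X'y for b.
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)

# Cross-check against NumPy's built-in least-squares routine.
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

# At the solution, the gradient X'(y - X b) is zero up to rounding error.
foc = X.T @ (y - X @ beta_hat)

print("beta_hat:", beta_hat)
print("first-order condition residual:", foc)
```

Any perturbation of `beta_hat` increases the residual sum of squares, in line with the global-minimum argument.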

This solution $\hat\beta$, called the ordinary least squares (OLS) estimator, can be shown to have very strong theoretical properties: it is unbiased, i.e. $\mathbb E[\hat\beta] = \beta$, meaning that on average across samples it recovers the true parameter $\beta$, and, under the classical Gauss-Markov assumptions (in particular homoskedastic errors), it has the lowest variance within the class of linear unbiased estimators, i.e. no other estimator in this class is expected to be closer to $\beta$ in the mean-squared sense. This estimator always estimates the coefficients of the linear conditional expectation function $\mathbb E[Y|X] = X'\beta$, and even if the conditional expectation function is not linear, the coefficients estimated by the OLS estimator recover the best linear prediction of $Y$ given $X$. This is a crucial point: when you estimate OLS, you always estimate the best linear prediction, which corresponds to the conditional mean if it can be described by a linear function. Any discussion of “misspecification” or “estimation bias” does (or at least: should!) not argue that we are unable to recover the linear prediction coefficients, but that we are unable to interpret these coefficients in a causal/desired way.

One thing we have only insufficiently addressed thus far is the quality of estimation. While the OLS estimator is unbiased and the “most efficient” estimator (lowest variance among linear unbiased estimators), in practice, its variance (i.e., the expected squared error) can still be very high, just not as high as the one you would obtain with another estimator. Therefore, if you have a simple model $y_i = \beta_0 + \beta_1 x_i + e_i$ and I tell you that OLS has been applied to obtain an estimate $\hat\beta_1$ based on 10 data points, would you be confident enough to rule out that the true value of $\beta_1$ is 0? And would you rule out that it is 1?

To answer such questions, we need to address the uncertainty associated with our estimation. Only once we can quantify this uncertainty will we be able to perform meaningful inference on the estimates we obtained. To do so, we rely on the key concepts of convergence in probability and convergence in distribution established above.

In our context, the random variable will be the OLS estimator $\hat\beta$. Treating the observations $(y_i, x_i)$ as realizations of $n$ independent and identically distributed random variables $(Y_i, X_i)$, the OLS estimator is

\[ \hat\beta = (X'X)^{-1}X'Y \]

To address the error we make in estimation, it is useful to consider the quantity $\hat\beta - \beta$. To express it in a useful way, we plug $Y = X\beta + e$ into the expression above, and we obtain

![Rendered by QuickLaTeX.com \[\begin{split} \hat \beta - \beta &= \left (X' X)^{-1} X'(X \beta + e\right) - \beta \\ & =(X' X)^{-1} X' e \end{split}\]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-0985f6e1c179236c93b06bf549863935_l3.png)

We can represent it using a vector-sum notation over observations $i = 1, \dots, n$ as (consider the structure of the design matrix $X$ to verify that this is true):

\[ \hat \beta - \beta = \left (\frac{1}{n} \sum_{i=1}^n X_i X_i' \right )^{-1 }\frac{1}{n}\sum_{i=1}^n X_i e_i \]

This is the quantity that we need to study in order to characterize the error we make in estimating $\beta$ using $\hat\beta$. To do so, we need to combine the Weak Law of Large Numbers and the Central Limit Theorem with one additional theorem:

Theorem: Slutsky’s theorem.

Let $(A_n)_{n\in\mathbb N}$ and $(B_n)_{n\in\mathbb N}$ be sequences of random variables (of conformable dimensions) with $A_n \overset{p}{\to} A$ and $B_n \overset{d}{\to} B$ and $A$ constant. Then,

\[ A_n + B_n \overset{d}{\to} A + B \hspace{0.5cm}\text{and}\hspace{0.5cm} A_n B_n \overset{d}{\to} A B \]

and if $A$ is invertible, then also

\[ A_n^{-1} B_n \overset{d}{\to} A^{-1} B \]

Theorem: Asymptotic Distribution of the OLS Estimator.

Let $\hat\beta$ be the OLS estimator and $\beta$ its expected value. Then,

\[ \sqrt{n}(\hat\beta - \beta) \overset{d}{\to} N\left(0, \mathbb E[X_i X_i']^{-1}\, \Sigma\, \mathbb E[X_i X_i']^{-1}\right) \]

where $\Sigma = \mathbb E[X_i X_i' e_i^2]$ is the variance matrix of $X_i e_i$.

Sketch of proof:

Step 0. For OLS, we assume that $\mathbb E[e_i | X_i] = 0$, which implies that $\mathbb E[X_i e_i] = 0$. Using this moment condition, we can derive that $\beta = \mathbb E[X_i X_i']^{-1}\mathbb E[X_i Y_i]$.

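Step 1. By the law of large numbers,

\[ \frac{1}{n}\sum_{i=1}^n X_i X_i' \overset{p}{\to} \mathbb E[X_i X_i'] \]

Moreover, the law of large numbers gives

\[ \frac{1}{n}\sum_{i=1}^n X_i e_i \overset{p}{\to} \mathbb E[X_i e_i] = 0 \]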

Step 2. Applying the central limit theorem,

![Rendered by QuickLaTeX.com \[ \frac{1}{\sqrt{n}} \sum_{i=1}^n X_i e_i = \sqrt{n} \left ( \frac{1}{n}\sum_{i=1}^n X_i e_i - \underbrace{\mathbb E[ X_i e_i]}_{=0}\right ) \overset d \to N(0, Var[ X_ie_i]). \]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-6584f282ddaa6b1c83dd62f970377459_l3.png)

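The variance of the asymptotic distribution is

\[ Var[X_i e_i] = \mathbb E[X_i X_i' e_i^2] - \mathbb E[X_i e_i]\,\mathbb E[X_i e_i]' = \mathbb E[X_i X_i' e_i^2] \]

so that compactly,

\[ \frac{1}{\sqrt{n}} \sum_{i=1}^n X_i e_i \overset{d}{\to} N(0, \mathbb E[X_i X_i' e_i^2]) \]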

Step 3. We have two components that correspond to the setup of Slutsky’s theorem. First, putting together the two results of step 1, we obtain the following corollary from Slutsky’s theorem (recalling that convergence in probability to a constant implies convergence in distribution to the same constant):

Corollary: OLS consistency.

For the OLS estimator $\hat\beta$, it holds that

\[ \hat\beta - \beta \overset{p}{\to} 0 \]

and thus also $\hat\beta \overset{p}{\to} \beta$. Therefore, we say that $\hat\beta$ is a consistent estimator of $\beta$.

This informs us that, asymptotically, deviations of $\hat\beta$ from $\beta$ will be arbitrarily small with probability approaching 1.

Combining Slutsky’s theorem with the distribution of $\frac{1}{\sqrt{n}}\sum_{i=1}^n X_i e_i$ obtained above using the CLT, we obtain the distribution of $\sqrt{n}(\hat\beta - \beta)$:

![Rendered by QuickLaTeX.com \[ \sqrt{n}(\hat \beta - \beta) = \left (\underbrace{\frac{1}{n} \sum_{i=1}^n \tilde X_i \tilde X_i'}_{\overset p \to \mathbb E[\tilde X_i \tilde X_i']} \right )^{-1 }\underbrace{\frac{1}{\sqrt{n}}\sum_{i=1}^n \tilde X_i e_i}_{\overset d \to N(0, \mathbb E[\tilde X_i \tilde X_i ' e_i^2])} \hspace{0.5cm}\overset d \to \hspace{0.5cm} N(0, \mathbb E[\tilde X_i \tilde X_i']^{-1} \mathbb E[\tilde X_i \tilde X_i ' e_i^2]\mathbb E[\tilde X_i \tilde X_i']^{-1} ) \]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-7303d97d1c6f3ae966c5461d1744bac4_l3.png)

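We define $\Sigma_{\hat\beta} := \mathbb E[\tilde X_i \tilde X_i']^{-1}\, \mathbb E[\tilde X_i \tilde X_i' e_i^2]\, \mathbb E[\tilde X_i \tilde X_i']^{-1}$ as the asymptotic variance of $\hat\beta$.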

q.e.d.

With this result, for sufficiently large samples, the distribution of $\sqrt{n}(\hat\beta - \beta)$ is approximately equal to the one of a random variable with distribution $N(0, \Sigma_{\hat\beta})$. This is useful because it also means that

\[ \hat\beta \overset{a}{\sim} N\left(\beta, \frac{1}{n}\Sigma_{\hat\beta}\right) \]

Here, $\overset{a}{\sim}$ is used to indicate that asymptotically, the distribution of $\hat\beta$ can be described as $N(\beta, \Sigma_{\hat\beta}/n)$. With some oversimplification, for large enough $n$, the distribution of $\hat\beta$ is as good as indistinguishable from a normal distribution with mean $\beta$ and variance $\Sigma_{\hat\beta}/n$.
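The large-sample behaviour described above can be illustrated by simulation. The sketch below, in Python with NumPy, rests on an assumed data-generating process (standard normal regressor, heteroskedastic error with conditional mean zero, "true" coefficients 1.0 and 2.0) chosen only for illustration. It repeatedly draws samples of size $n$, estimates the slope by OLS, and reports the standard deviation of the estimates with and without scaling by $\sqrt{n}$.

```python
import numpy as np

rng = np.random.default_rng(2)
beta = np.array([1.0, 2.0])      # hypothetical true (intercept, slope)

def slope_estimates(n, reps=500):
    """Draw `reps` samples of size n and return the OLS slope of each."""
    out = np.empty(reps)
    for r in range(reps):
        x = rng.normal(size=n)
        X = np.column_stack([np.ones(n), x])
        # Error with E[e | x] = 0 but variance depending on x.
        e = rng.normal(size=n) * (1.0 + np.abs(x))
        y = X @ beta + e
        out[r] = np.linalg.solve(X.T @ X, X.T @ y)[1]
    return out

results = {}
for n in (50, 500, 5000):
    sd = slope_estimates(n).std()
    results[n] = (sd, np.sqrt(n) * sd)   # raw and sqrt(n)-scaled spread
    print(n, results[n])
```

The raw standard deviation shrinks as $n$ grows (consistency), while the scaled one settles near a constant, which is what a non-degenerate limit distribution of $\sqrt{n}(\hat\beta - \beta)$ suggests.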

The important bit of information obtained from this is that we now have an approximation to the finite sample variance of $\hat\beta$ that is “good” if the sample is “large”. The matrix $\Sigma_{\hat\beta}$ is straightforward to estimate using averages instead of expectations, and replacing the unobserved $e_i$ with its sample counterpart $\hat e_i = y_i - \tilde X_i'\hat\beta$:

![Rendered by QuickLaTeX.com \[ \hat \Sigma_{\hat\beta} = \left (\frac{1}{n}\sum_{i=1}^n \tilde X_i \tilde X_i'\right )^{-1}\frac{1}{n}\sum_{i=1}^n \tilde X_i \tilde X_i' \hat e_i^2 \left (\frac{1}{n}\sum_{i=1}^n \tilde X_i \tilde X_i'\right )^{-1}. \]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-dd266b2306a0ee61093dcd43de4febc2_l3.png)
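The estimator above translates almost literally into matrix code. The following is a minimal sketch in Python with NumPy; the function name `ols_with_robust_variance` and the simulated data are assumptions made for illustration, and the rows of `X` are taken to include the constant. Dividing the diagonal by $n$ gives approximate variances of $\hat\beta$ itself.

```python
import numpy as np

def ols_with_robust_variance(X, y):
    """Return OLS coefficients, the sandwich estimate of the asymptotic
    variance of sqrt(n) * (beta_hat - beta), and standard errors."""
    n = X.shape[0]
    beta_hat = np.linalg.solve(X.T @ X, X.T @ y)
    resid = y - X @ beta_hat                      # sample counterpart of e_i
    bread = np.linalg.inv(X.T @ X / n)            # (1/n sum X_i X_i')^{-1}
    meat = (X * resid[:, None] ** 2).T @ X / n    # 1/n sum X_i X_i' e_i^2
    sigma_hat = bread @ meat @ bread
    std_err = np.sqrt(np.diag(sigma_hat) / n)     # approx. std. dev. of beta_hat
    return beta_hat, sigma_hat, std_err

# Hypothetical heteroskedastic data, for illustration only.
rng = np.random.default_rng(3)
n = 1000
x = rng.normal(size=n)
X = np.column_stack([np.ones(n), x])
y = X @ np.array([1.0, 2.0]) + rng.normal(size=n) * (1.0 + np.abs(x))

beta_hat, sigma_hat, std_err = ols_with_robust_variance(X, y)
print("beta_hat:", beta_hat)
print("standard errors:", std_err)
```

The middle term is computed in vectorized form; summing the outer products $X_i X_i' \hat e_i^2$ observation by observation gives the same matrix.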

In the matrix $\hat\Sigma_{\hat\beta}$, the (1,1) element gives the estimated variance of $\hat\beta_0$, and the $(j+1, j+1)$ element the one of $\hat\beta_j$, denoted as $\hat\sigma_0^2$ and $\hat\sigma_j^2$, respectively. One can show that

\[ \frac{\sqrt{n}\,(\hat\beta_j - \beta_j)}{\hat\sigma_j} \overset{d}{\to} N(0, 1) \]

This circumstance can be used to test hypotheses such as “$\beta_1 = 0$” or “$\beta_1 = 1$”.
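As a sketch of how such a test could be carried out, the Python code below computes the standardized statistic and a two-sided p-value from the standard normal approximation. The data are simulated with an assumed true slope of 1, and the helper `t_test` is a hypothetical name introduced here, not part of any library.

```python
import math
import numpy as np

rng = np.random.default_rng(4)
n = 500
x = rng.normal(size=n)
X = np.column_stack([np.ones(n), x])
y = X @ np.array([0.5, 1.0]) + rng.normal(size=n)   # hypothetical true slope: 1

# OLS estimate and sandwich variance estimate.
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)
resid = y - X @ beta_hat
bread = np.linalg.inv(X.T @ X / n)
sigma_hat = bread @ ((X * resid[:, None] ** 2).T @ X / n) @ bread
std_err = np.sqrt(np.diag(sigma_hat) / n)

def t_test(j, value):
    """Test H0: beta_j = value using the asymptotic N(0, 1) approximation."""
    t = (beta_hat[j] - value) / std_err[j]
    p = math.erfc(abs(t) / math.sqrt(2.0))   # equals 2 * (1 - Phi(|t|))
    return t, p

t0, p0 = t_test(1, 0.0)   # H0: slope equals 0
t1, p1 = t_test(1, 1.0)   # H0: slope equals 1
print("H0 slope = 0:", t0, p0)
print("H0 slope = 1:", t1, p1)
```

A small p-value is evidence against the hypothesized value; a large one means the data cannot rule it out, which is different from confirming it.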

To conclude the discussion of the linear model, we have introduced the concept of the conditional expectation, and the linear conditional expectation regression model. This model is also useful if the true conditional expectation is not linear, among other reasons due to a Taylor approximation justification. In this model, coefficients can be interpreted as marginal effects of one variable on the outcome $Y$, holding the other variables constant. Therefore, it allows us to describe the partial relationship between $Y$ and $X_j$ that is unexplained by third variables $X_l$, $l \neq j$. This model can be estimated using the OLS estimator, which can be derived from a simple unconstrained optimization problem. This estimator has powerful theoretical properties, and always estimates the best linear prediction of $Y$ given $X$. The practical exercise of the econometrician is usually not to allow for “consistent” estimation of the linear prediction (which is guaranteed), but to write down a linear prediction that is useful for studying a given practical issue at hand. Beyond estimation, we have covered inference, i.e. methods of quantifying estimation uncertainty, as well as their justification through large sample asymptotics. These methods allow us to test for, and especially to reject, certain values for the parameters in the model.

If you feel like testing your understanding of the latter part of this chapter, you can take a short quiz found here.