An overview of this chapter’s contents and take-aways can be found here.

Very frequently as economists, we have to deal with matrices because they are at the heart of (constrained) optimization problems where we have more than one variable, e.g. utility maximization given budget sets, cost-efficient production given an output target with multiple goods or deviation minimization in statistical analysis when considering multiple parameters. To engage in such analysis, we need to know a variety of matrix properties, what operations we can apply to one or multiple matrices, and very importantly, how to use matrices to solve systems of linear equations.

When considering such systems, a number of (frequently non-obvious) questions are

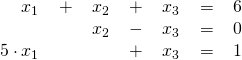

We will shortly define formally how matrices can be multiplied with each other and with vectors. For now, let us confine ourselves to the intuition of why matrices are a useful concept in the context of linear equation systems. Consider the system

\[\begin{aligned} x_1 + x_2 + x_3 &= 6\\ x_2 - x_3 &= 0\\ 5x_1 + x_3 &= 1 \end{aligned}\]

which, by stacking the equations into a vector, we can re-write as

![Rendered by QuickLaTeX.com \[\begin{pmatrix} 1\cdot x_1 + 1\cdot x_2 + 1\cdot x_3\\ 0\cdot x_1 + 1\cdot x_2 + (-1)\cdot x_3 \\ 5\cdot x_1 + 0\cdot x_2 + 1\cdot x_3 \end{pmatrix} = \begin{pmatrix} 6 \\ 0 \\ 1\end{pmatrix} \hspace{0.5cm}\Leftrightarrow\hspace{0.5cm} Ax = \begin{pmatrix} 1 & 1 & 1\\ 0 & 1 & -1 \\ 5 & 0 & 1\end{pmatrix}\begin{pmatrix} x_1 \\ x_2 \\ x_3\end{pmatrix} = \begin{pmatrix} 6 \\ 0 \\ 1\end{pmatrix} =: b\]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-0b9d7877c028e4ddddb112ac4dd07662_l3.png)

where $x = (x_1, x_2, x_3)'$ and $A$ is the matrix on the LHS of the last equation. We will worry about why the equivalence holds after formally introducing matrix multiplication. Here, we see that generally, a system of $n$ equations in $m$ variables has a matrix representation with a coefficient matrix $A$ of dimension $n\times m$ and a solution vector $b\in\mathbb R^n$. You may be familiar with a few characterizations of $A$ that help us to determine the number of solutions: In many cases, if $m\geq n$, we can find at least one vector $x$ that solves the system, and if $m > n$, then there are generally infinitely many solutions. However, there is much more we can say about the solutions from looking at $A$, and how exactly this works will be a central aspect of this chapter.

Because we want to do mathematical analysis with matrices, a first crucial step is to make ourselves aware of the algebraic structure that we attribute to a set of matrices with given dimensions. This structure allows us to perform the basic mathematical operations (addition and scalar multiplication) that serve as the ground for any further analysis we will eventually engage in. As a first step, let us formally consider what a matrix is:

Definition: Matrix of dimension $n\times m$.

Let $(a_{ij}: i\in\{1,\ldots,n\},\ j\in\{1,\ldots,m\})$ be a collection of elements from a basis set $X$, i.e. $a_{ij}\in X$ for all $i, j$. Then, the matrix $A$ of these elements is the ordered two-dimensional collection

![Rendered by QuickLaTeX.com \[A = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1m} \\ a_{21} & a_{22} & \cdots & a_{2m} \\ \vdots & \vdots & \ddots & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nm} \end{pmatrix}. \]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-734ef9804b90dcf4da712edc274b6b86_l3.png)

We call $n$ the row dimension of $A$ and $m$ the column dimension of $A$. We write $A\in X^{n\times m}$. As an alternative notation, one may write $A = (a_{ij})_{i=1,\ldots,n;\ j=1,\ldots,m}$, or, if $n = m$, $A = (a_{ij})_{i,j=1,\ldots,n}$.

In the following, we restrict attention to $X = \mathbb R$, so that the $a_{ij}$ are real numbers. Note however that this need not be the case; indeed, an important concept in econometrics is that of so-called block matrices $A = (A_{ij})_{i,j}$, where the $A_{ij}$ are themselves matrices of real numbers, and for derivatives, we frequently consider matrices of (derivative) operators, that is, functions, as opposed to numbers. Moreover, the entries can be random variables (in econometrics), functions, and even operators, as we will see in chapter 3.

To apply the vector space concept to matrices, note that matrices of real numbers can be viewed as a generalization of real vectors: a vector $x\in\mathbb R^n$ is simply a matrix of dimension $n\times 1$. We now consider objects that may have multiple columns, or respectively, stack multiple vectors in an ordered fashion. Thus, when $a_1,\ldots,a_m\in\mathbb R^n$ are the columns of a matrix $A\in\mathbb R^{n\times m}$, we may also write $A = (a_1, a_2, \ldots, a_m)$ (an alternative, yet less frequently used notation stacks the rows of $A$ on top of each other). Therefore, a natural way of defining addition and scalar multiplication for matrices is to apply the operators of the real vector context “element”-wise, i.e. for each column separately:

Definition: Addition of Matrices.

For two matrices $A$ and $B$ of identical dimension, i.e. $A = (a_{ij})_{i=1,\ldots,n;\ j=1,\ldots,m}$ and $B = (b_{ij})_{i=1,\ldots,n;\ j=1,\ldots,m}$, their sum is obtained from addition of their elements, that is

\[A + B = (a_{ij} + b_{ij})_{i=1,\ldots,n;\ j=1,\ldots,m}.\]

Note that to conveniently define addition, we have to restrict attention to matrices of the same dimension! This already means that we will consider not the whole universe of matrices as a vector space, but each potential specific combination of dimensions separately.

Definition: Scalar Multiplication of Matrices.

Let $A = (a_{ij})_{i=1,\ldots,n;\ j=1,\ldots,m}$ and $\lambda\in\mathbb R$. Then, scalar multiplication of $A$ with $\lambda$ is defined element-wise, that is

\[\lambda A = (\lambda a_{ij})_{i=1,\ldots,n;\ j=1,\ldots,m}.\]
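The two element-wise definitions can be sketched in a few lines of plain Python. This is only an illustration under the assumption that a matrix is stored as a list of rows; the function names `mat_add` and `scalar_mul` are made up for this example and do not come from the text.

```python
# Illustrative sketch: a matrix is represented as a list of rows (an assumption).

def mat_add(A, B):
    """Element-wise sum; only defined for matrices of identical dimension."""
    if len(A) != len(B) or any(len(ra) != len(rb) for ra, rb in zip(A, B)):
        raise ValueError("matrices must have the same dimension")
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def scalar_mul(lam, A):
    """Multiply every entry of A by the scalar lam."""
    return [[lam * a for a in row] for row in A]

A = [[1, 2, 3], [4, 5, 6]]    # a 2x3 matrix
B = [[0, 1, 0], [-1, 0, 2]]   # another 2x3 matrix
S = mat_add(A, B)             # [[1, 3, 3], [3, 5, 8]]
T = scalar_mul(2, A)          # [[2, 4, 6], [8, 10, 12]]
```

The dimension check in `mat_add` mirrors the requirement that addition is only defined for matrices of the same dimension.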

From chapter 1, we know that for graphical and also formal intuition, it is very useful if some algebraic structure constitutes a vector space, because then, we can deal with it very “similarly” to the way we work with real numbers. Indeed, the set of matrices of given dimensions $n\times m$, together with addition and scalar multiplication as we just defined, constitutes a real vector space:

Theorem: Vector Space of Matrices.

The set of all $n\times m$ matrices, $\mathbb R^{n\times m}$, together with the algebraic operations matrix addition and multiplication with a scalar as defined above defines a vector space.

While we will not be concerned much with distances of matrices in this course, defining them in accordance with the previous chapter is indeed possible: the matrix norm commonly used is very similar to the maximum-norm defined earlier:

\[\|A\|_\infty = \max\{|a_{ij}|: i\in\{1,\ldots,n\},\ j\in\{1,\ldots,m\}\}.\]

Should you be curious or feel that you need some more practice with the norm concept, you can try to verify that this indeed constitutes a norm, checking all 3 parts of the norm definition.

Before we move to deeper analysis of matrices and their usefulness for the purpose of the economist, some review and introduction of important vocabulary is necessary. In this section, you can find a collection of the most central terminology for certain special characteristics matrices may have.

First, when characterizing matrices, it may be worthwhile to think about when we say that two matrices are equal.

Definition: Matrix Equality.

The matrices $A$ and $B$ are said to be equal if and only if (i) they have the same dimension, i.e. $A, B\in\mathbb R^{n\times m}$, and (ii) all their elements are equal, i.e. $a_{ij} = b_{ij}$ for all $i\in\{1,\ldots,n\}$, $j\in\{1,\ldots,m\}$.

Note especially that it is thus not sufficient that e.g. all elements equal the same value, so that, for instance, the matrices $\begin{pmatrix} 0 & 0\\ 0 & 0\end{pmatrix}$ and $\begin{pmatrix} 0 & 0 & 0\\ 0 & 0 & 0\end{pmatrix}$ are not equal.

Definition: Transposed Matrix.

Let $A = (a_{ij})_{i=1,\ldots,n;\ j=1,\ldots,m}\in\mathbb R^{n\times m}$. Then, the transpose of $A$ is the matrix $A' = (a'_{ij})_{i=1,\ldots,m;\ j=1,\ldots,n}\in\mathbb R^{m\times n}$ where $a'_{ij} = a_{ji}$ for all $i\in\{1,\ldots,m\}$, $j\in\{1,\ldots,n\}$. Alternative notations are $A^T$ or $A^t$.

Thus, the transpose $A'$ “inverts” the dimensions, or respectively, it stacks the columns of $A$ in its rows (or equivalently, the rows of $A$ in its columns). Note that dimensions “flip”, i.e. that if $A$ is of dimension $n\times m$, then $A'$ is of dimension $m\times n$. An example is given below in equation (1).
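As a small illustration of how the dimensions flip, here is a sketch in plain Python, again assuming a matrix is stored as a list of rows; the helper name `transpose` is chosen for this example only.

```python
# Illustrative sketch: transposition turns the columns of A into the rows of A'.

def transpose(A):
    """Return A' for a matrix A given as a list of rows."""
    return [list(col) for col in zip(*A)]

A = [[1, 2, 3],
     [4, 5, 6]]          # dimension 2x3
At = transpose(A)        # dimension 3x2: [[1, 4], [2, 5], [3, 6]]
```

Transposing twice returns the original matrix, which is a quick sanity check for any implementation.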

Definition: Row and Column Vectors.

Let $A\in\mathbb R^{n\times m}$. If $n = 1$, $A$ is called a row vector, and a column vector if $m = 1$. By convention, one also calls a column vector simply vector.

According to this definition, as we also did before with vectors in $\mathbb R^n$, when you just read the word “vector”, you should think of a column vector.

Definition: Zero Matrices.

The zero matrix of dimension $n\times m$, denoted $\mathbf 0_{n\times m}$, is the $n\times m$ matrix where every entry is equal to zero.

Definition: Square Matrix.

Let $A\in\mathbb R^{n\times m}$. Then, if $n = m$, $A$ is said to be a square matrix.

Definition: Diagonal Matrix.

A square matrix $A = (a_{ij})_{i,j\in\{1,\ldots,n\}}$ is said to be a diagonal matrix if all of its off-diagonal elements are zero, i.e. $a_{ij} = 0$ for all $i\neq j$. We write $A = \text{diag}\{a_{11},\ldots,a_{nn}\}$.

Note that the diagonal elements $a_{ii}$, $i\in\{1,\ldots,n\}$ need not be non-zero for $A$ to be labeled “diagonal”, and thus, e.g. the zero matrix is diagonal, and so is, for instance, $\begin{pmatrix} 1 & 0\\ 0 & 0\end{pmatrix}$.

Definition: Upper and Lower Triangular Matrix.

A square matrix $A = (a_{ij})_{i,j\in\{1,\ldots,n\}}$ is said to be upper triangular if $a_{ij} = 0$ for all $i > j$, i.e. when the entry $a_{ij}$ equals zero whenever it lies below the diagonal. Conversely, $A$ is said to be lower triangular if $A'$ is upper triangular, i.e. $a_{ij} = 0$ for all $i < j$.

Rather than studying the definition, the concept may be more straightforward to grasp by just looking at an upper triangular matrix:

(1)

From its transpose, you can see why the transposition concept is used in the definition for the lower triangular matrix.

Definition: Symmetric Matrix.

A square matrix $A = (a_{ij})_{i,j\in\{1,\ldots,n\}}$ is said to be symmetric if $a_{ij} = a_{ji}$ for all $i, j\in\{1,\ldots,n\}$.

Equivalently, symmetry is characterized by coincidence of $A$ and its transpose $A'$, i.e. $A = A'$.

Definition: Identity Matrices.

The $n\times n$ identity matrix, denoted $\mathbf{I_n}$, is a diagonal matrix with all its diagonal elements equal to 1.

Again, the concept may be more quickly grasped visually:

![Rendered by QuickLaTeX.com \[ \mathbf{I_n} = \begin{pmatrix} 1&0&\cdots&0\\ 0&1&\ddots&\vdots\\ \vdots&\ddots&\ddots&0\\ 0&\cdots&0&1\end{pmatrix}.\]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-fdbb6ad0bbebebd382e90dc91a32bbed_l3.png)

To check your understanding and to get more familiar with the concepts, think about the following questions:

Now that we have laid the formal foundation by introducing the vector spaces of matrices of certain dimension and made ourselves familiar with a variety of important matrices, it is time to take a closer look at how we do calculus with matrices beyond the basic operations. Similar to the scalar product discussed for vectors, we first should know how to multiply two elements of the vector space with each other, rather than just one element with a scalar:

Definition: Matrix Product.

Consider two matrices $A\in\mathbb R^{n\times m}$ and $B\in\mathbb R^{m\times k}$ **so that the column dimension of $A$ is equal to the row dimension of $B$**. Then, the matrix $C\in\mathbb R^{n\times k}$ of column dimension equal to the one of $B$ and row dimension equal to the one of $A$ is called the product of $A$ and $B$, denoted $C = AB$, if $c_{ij} = \sum_{l=1}^m a_{il}b_{lj}$ for all $i\in\{1,\ldots,n\}$, $j\in\{1,\ldots,k\}$.

As made clear by the bold text, matrix multiplication is subject to a compatibility condition, that differs from the one discussed before for addition. Thus, not all matrices that can be multiplied with each other can also be added, and the converse is also true.

To see just how closely this concept relates to the scalar product, write $A$ in row notation and $B$ in column notation, i.e. let $a_1',\ldots,a_n'\in\mathbb R^{1\times m}$ be row vectors such that $A = \begin{pmatrix} a_1'\\ \vdots\\ a_n'\end{pmatrix}$ and $b_1,\ldots,b_k\in\mathbb R^m$ column vectors so that $B = (b_1,\ldots,b_k)$. Then,

![Rendered by QuickLaTeX.com \[AB = \begin{pmatrix}a_1'\cdot b_1 &a_1'\cdot b_2 & \cdots & a_1'\cdot b_k \\ a_2'\cdot b_1 &a_2'\cdot b_2 & \ddots & \vdots \\ \vdots & \ddots & \ddots & a_{n-1}'\cdot b_k \\ a_n'\cdot b_1 & \cdots & a_n'\cdot b_{k-1} & a_n'\cdot b_k, \end{pmatrix}\]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-047c5c9037958dbe6bcc64c658ce403b_l3.png)

and the matrix product emerges just as an ordered collection of the scalar products of $A$’s rows with $B$’s columns!
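The "rows times columns" reading of the product can be sketched directly in plain Python. The helper names `dot` and `matmul` and the two small matrices are illustrative assumptions, not taken from the text; matrices are stored as lists of rows.

```python
# Illustrative sketch: entry (i, j) of AB is the scalar product of row i of A
# with column j of B.

def dot(u, v):
    """Scalar product of two vectors of equal length."""
    return sum(a * b for a, b in zip(u, v))

def matmul(A, B):
    """Matrix product AB; requires columns of A == rows of B."""
    if len(A[0]) != len(B):
        raise ValueError("column dimension of A must equal row dimension of B")
    columns_of_B = list(zip(*B))
    return [[dot(row, col) for col in columns_of_B] for row in A]

A = [[1, 2, 0],
     [0, 1, -1]]         # 2x3
B = [[1, 0],
     [2, 1],
     [0, 3]]             # 3x2
C = matmul(A, B)         # 2x2: [[5, 2], [2, -2]]
```

The compatibility check at the top of `matmul` is exactly the condition on the "inner" dimensions stated in the definition.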

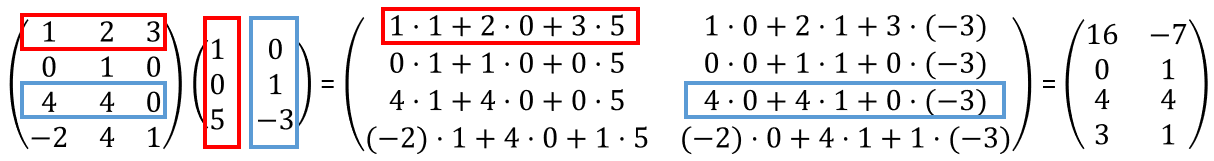

It may require some practice to confidently handle matrix multiplication, but if you aren’t already, you should become familiar with it rather quickly. Here is an example that visually illustrates how the entries in the matrix product come to be:

If you try to multiply this expression the other way round, you will quickly see that this doesn’t work: recall that the “inner” dimensions need to coincide, so if $A$ is $n\times m$, $B$ must be $m\times k$ for the product $AB$ to exist. Thus, both $AB$ and $BA$ exist if and only if $B$ is of dimension $m\times n$, and the two products have the same dimension only if the matrices are square and of equal dimension! And even then, it will generally not hold that $AB = BA$.
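A minimal worked example of this failure of commutativity, with two $2\times 2$ matrices chosen here purely for illustration:

```latex
\[
A = \begin{pmatrix} 1 & 1\\ 0 & 1\end{pmatrix},\quad
B = \begin{pmatrix} 1 & 0\\ 1 & 1\end{pmatrix}
\quad\Rightarrow\quad
AB = \begin{pmatrix} 2 & 1\\ 1 & 1\end{pmatrix}
\neq
\begin{pmatrix} 1 & 1\\ 1 & 2\end{pmatrix} = BA.
\]
```

Both products exist and have the same dimension, yet they differ in their diagonal entries.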

Despite its complicated look, matrix multiplication does have some desirable properties:

Theorem: Associativity and Distributivity of the Product.

Assuming that $A, B, C$ are matrices of appropriate dimension, the product for matrices is

1. associative: $(AB)C = A(BC)$,
2. distributive over matrix addition: $A(B + C) = AB + AC$ and $(A + B)C = AC + BC$.

In terms of computing a matrix product, associativity especially means that the order in which you carry out the individual multiplications (i.e. how you set the parentheses) does not matter, as long as the order of the factors themselves is left unchanged. The theorem tells us that standard rules related to addition and multiplication continue to hold for matrices, e.g. when $A, B, C$ and $D$ are matrices of appropriate dimension, then $(A + B)(C + D) = AC + AD + BC + BD$. An exception is of course that, as discussed above, commutativity of multiplication is not guaranteed.

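It is noteworthy that the zero and identity element in the matrix space can be dealt with in a fashion very similar to the numbers 0 and 1 in $\mathbb R$:

Theorem: Zero and Identity matrix.

Let $A\in\mathbb R^{n\times m}$. Then,

1. $A + \mathbf 0_{n\times m} = A$,
2. for any $k\in\mathbb N$, $A\,\mathbf 0_{m\times k} = \mathbf 0_{n\times k}$ and $\mathbf 0_{k\times n}A = \mathbf 0_{k\times m}$,
3. $A\,\mathbf{I_m} = A$ and $\mathbf{I_n}A = A$.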

Be sure to carefully think about where the dimensions of the zero and identity matrices come from, i.e. why they are chosen like this in the theorem! From this, take away that zero and identity matrix work in the way you would expect them to, and that there are no extraordinary features to be taken into account. Further useful properties of matrix operations are

Theorem: Transposition, Sum, and Product.

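1. Let $A, B\in\mathbb R^{n\times m}$. Then, $(A + B)' = A' + B'$.
2. Let $A\in\mathbb R^{n\times m}$ and $B\in\mathbb R^{m\times k}$. Then, $(AB)' = B'A'$.
3. If $A\in\mathbb R^{1\times 1}$, then $A$ is a scalar and $A' = A$.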

While the former two points are more or less obviously useful, the third may appear odd; isn’t this obvious?! Why should it be part of a theorem? Well, the practical use is that frequently, this can be used to achieve a more convenient representation of complex expressions. For instance, in econometrics, when $\beta$ denotes a vector of linear regression model coefficients (if you are not familiar with this expression, it’s not too important what precisely this quantity is right now, just note that $\beta\in\mathbb R^k$, where $k$ is the number of model variables), the squared errors in the model $y = x'\beta + u$ ($y$ random variable, $x$ random vector of length $k$) that are of interest to estimator choice are

\[u^2 = (y - x'\beta)^2 = y^2 - 2yx'\beta + (x'\beta)^2.\]

Now, when taking expectations of such an expression (summand-wise), we want the non-random parameters (here: $\beta$) to either be at the beginning or the end of an expression. For the last one, this is not immediately the case: $(x'\beta)^2 = x'\beta x'\beta$. However, noting that $x'\beta$ is scalar, $x'\beta = (x'\beta)' = \beta'x$ (with Point 2 in the Theorem), and thus, $(x'\beta)^2 = \beta'xx'\beta$, an expression that we can handle well also when considering the expected value.

As a final remark on notation, note that we can use matrices (and vectors as special examples of them) for more compact representations. Consider the problem of estimating the parameter $\beta$ that we just considered above. Here, our standard go-to choice is the ordinary least squares (OLS) estimator $\hat\beta^{OLS}$, which minimizes the sum of squared residuals in prediction of $y_i$ using the information $x_i$ and the prediction vector $b$ over all possible prediction vectors $b\in\mathbb R^k$:

![Rendered by QuickLaTeX.com \[ SSR(b) = \sum_{i=1}^n (y_i - x_i'b)^2 = \sum_{i=1}^n u_i(b)^2.\]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-02b5479743e97e2b8b24a303fa44b5d9_l3.png)

When defining $u(b) = (u_1(b),\ldots,u_n(b))'$, we can simply write $SSR(b) = u(b)'u(b)$. Generally, note that the scalar product of a vector with itself is just the sum of squares:

![Rendered by QuickLaTeX.com \[\forall v=(v_1,\ldots,v_n)'\in\mathbb R^n: v'v=\sum_{j=1}^nv_j^2.\]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-26902962897f513432882116a1d04246_l3.png)
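To make the compact notation concrete, the following plain-Python sketch computes the residual vector $u(b)$ and then $SSR(b) = u(b)'u(b)$. The data `y`, `X` and the candidate vector `b` are made-up numbers for illustration only, and the function name `ssr` is hypothetical.

```python
# Illustrative sketch with made-up data: SSR(b) as the scalar product u(b)'u(b).

def ssr(y, X, b):
    """Return (SSR(b), u(b)) where u_i(b) = y_i - x_i'b."""
    u = [yi - sum(xij * bj for xij, bj in zip(xi, b)) for yi, xi in zip(y, X)]
    return sum(ui * ui for ui in u), u

y = [1, 2, 4]                  # outcomes y_1, ..., y_n (invented)
X = [[1, 0], [1, 1], [1, 2]]   # row i holds x_i' (invented)
b = [1, 1]                     # one candidate prediction vector
total, u = ssr(y, X, b)        # u == [0, 0, 1], total == 1
```

Minimizing `total` over all candidate vectors `b` is what the OLS estimator does; the sketch only evaluates the objective at one point.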

Similarly, this applies to sums of matrices and sums of vector products.

This concludes our introductory discussion of matrices and matrix algebra.

Re-consider the system of linear equations discussed earlier in this chapter. Here, you saw that stacking the equations into a vector, one could arrive at a matrix representation with just one equality sign, characterized by

(2) \[Ax = b\]

where $A$ is a matrix of LHS coefficients of the variables $x_1,\ldots,x_m$ stacked in $x$, and $b$ is the vector of RHS “result” coefficients. In the case of the example above, the specific system is

![Rendered by QuickLaTeX.com \[\underbrace{\begin{pmatrix} 1 & 1 & 1\\ 0 & 1 & -1 \\ 5 & 0 & 1\end{pmatrix}}_{=A}\underbrace{\begin{pmatrix} x_1 \\ x_2 \\ x_3\end{pmatrix}}_{=x} = \underbrace{\begin{pmatrix} 6 \\ 0 \\ 1\end{pmatrix}}_{=b}.\]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-a6b22c0ad03addc74b28510257c45428_l3.png)

As an exercise of how matrix multiplication works, you can multiply out $Ax$ in this example and verify that $Ax = b$ is indeed equivalent to the system of individual equations.
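The multiplication can also be checked numerically. The sketch below multiplies $A$ with a candidate vector and compares the result with $b$; the helper name `matvec` is illustrative, and the candidate `x_candidate` was obtained by hand substitution and should be read as an assumption that the code verifies rather than derives.

```python
from fractions import Fraction

# Illustrative sketch: row i of A times x reproduces the LHS of equation i.

def matvec(A, x):
    """Matrix-vector product Ax for A given as a list of rows."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]

A = [[1, 1, 1],
     [0, 1, -1],
     [5, 0, 1]]
b = [6, 0, 1]

# Candidate solution found by substitution (x2 = x3, then solve for x1).
x_candidate = [Fraction(-4, 9), Fraction(29, 9), Fraction(29, 9)]
lhs = matvec(A, x_candidate)
print(lhs == b)
```

Exact fractions are used so that the comparison with $b$ is not affected by floating-point rounding.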

Recall that our central questions to this equation system were:

Thus, the issue at hand is to characterize the solution(s) for $x$, i.e. the vectors $x\in\mathbb R^m$ that satisfy equation (2), ideally in a computationally tractable way.

As always, let us get an intuition for the things we don’t know starting from something we know: if $A$ and $b$ were real numbers and $A$ was unequal to zero, you immediately know how to solve for $x$: just bring $A$ to the other side by dividing by it. If instead $A = 0$ (i.e. $A$ can not be “inverted”), you know that there is no solution for $x$ if $b\neq 0$, but if $b = 0$, there are a great variety of solutions for $x$; indeed, every $x\in\mathbb R$ would solve the equation. The idea is very similar with matrix equations, we just need a slightly different or respectively more general notion of “dividing by” and “invertibility”.

Similar to calculus with real numbers, we can define a multiplicative inverse for some $A\in\mathbb R^{n\times n}$:

Definition: Inverse Matrices.

Consider two square matrices $A, B\in\mathbb R^{n\times n}$. Then, $B$ is called the inverse of $A$ if $AB = BA = \mathbf{I_n}$. We write $B = A^{-1}$ so that $AA^{-1} = A^{-1}A = \mathbf{I_n}$.

As with real numbers, we can show that the multiplicative inverse is unique, i.e. that for every $A\in\mathbb R^{n\times n}$, there exists at most one inverse matrix $A^{-1}$:

Proposition: Uniqueness of the Inverse Matrix.

Let $A\in\mathbb R^{n\times n}$ and suppose that the inverse of $A$ exists. Then, the inverse of $A$ is unique.
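A short argument for why this holds, written as a sketch (the names $B$ and $C$ for two candidate inverses are introduced here only for illustration): suppose that both $B$ and $C$ satisfy the defining property of an inverse of $A$. Then, associativity of the matrix product gives

```latex
\[ B = B\,\mathbf{I_n} = B(AC) = (BA)C = \mathbf{I_n}\,C = C. \]
```

Hence, any two inverses of $A$ must coincide.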

However, existence is not guaranteed, and in contrast to the real numbers, more than a single element ($0$) will be non-invertible in the space $\mathbb R^{n\times n}$. Existence of the inverse is rigorously discussed below. For now, you should take away that the easiest case of a system of linear equations is one where the matrix $A$ is invertible. Indeed, the following sufficient condition is what economists rely on most of the time when solving linear equation systems:

Proposition: Invertibility and Unique Solution Existence.

Consider a system of linear equations $Ax = b$ with unknowns $x\in\mathbb R^m$ and coefficients $A\in\mathbb R^{n\times m}$, $b\in\mathbb R^n$. Then, if $n = m$ and $A$ is invertible, the system has a unique solution given by $x^* = A^{-1}b$.

To see this, suppose $A$ is invertible, and that $x^*$ solves the system. Then,

\[Ax^* = b \quad\Leftrightarrow\quad A^{-1}Ax^* = A^{-1}b \quad\Leftrightarrow\quad x^* = A^{-1}b.\]

As discussed above, if we can invert $A$, we can just bring it to the other side; this is exactly the same principle as with a single equation with real numbers. For this sufficient condition to be applicable, we must have that $n = m$ for $A$ to be square, i.e. we must have as many equations as unknowns. It may also be worthwhile to keep in mind that the converse of the Proposition above is also true: If $Ax = b$ has a unique solution and $A$ is square, then $A$ is invertible.
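For the example system from the beginning of the chapter, the unique solution can be computed with a few lines of plain Python. The sketch below uses Gauss-Jordan style row reduction on the augmented matrix $(A, b)$ rather than forming $A^{-1}$ explicitly; this is a choice made for the illustration, and the function name `solve` is hypothetical.

```python
from fractions import Fraction

# Illustrative sketch: solve Ax = b for square A by row-reducing (A | b).

def solve(A, b):
    """Return the unique solution of Ax = b, or raise if A is not invertible."""
    n = len(A)
    M = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        # Find a row at or below the diagonal with a non-zero entry in this column.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is not invertible")
        M[col], M[pivot] = M[pivot], M[col]      # exchange two rows
        p = M[col][col]
        M[col] = [v / p for v in M[col]]         # scale the pivot row to 1
        for r in range(n):
            if r != col and M[r][col] != 0:      # eliminate the column elsewhere
                f = M[r][col]
                M[r] = [vr - f * vc for vr, vc in zip(M[r], M[col])]
    return [row[-1] for row in M]

A = [[1, 1, 1], [0, 1, -1], [5, 0, 1]]
b = [6, 0, 1]
x = solve(A, b)   # [Fraction(-4, 9), Fraction(29, 9), Fraction(29, 9)]
```

Each step inside the loop is a row exchange, a row scaling, or the addition of a multiple of one row to another, so the sketch only uses row manipulations of the kind discussed in this chapter.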

While we have just seen the value of the inverse matrix in solving linear equation systems, we do not yet know how we determine whether $A$ can be inverted and if so, how to determine the inverse, so let us focus on these issues now.

First, some helpful relationships for inverse matrices are:

Proposition: Invertibility of Derived Matrices.

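Suppose that $A, B\in\mathbb R^{n\times n}$ are invertible. Then,

1. $A'$ is invertible with $(A')^{-1} = (A^{-1})'$,
2. $AB$ is invertible with $(AB)^{-1} = B^{-1}A^{-1}$,
3. for any $\lambda\in\mathbb R\setminus\{0\}$, $\lambda A$ is invertible with $(\lambda A)^{-1} = \frac{1}{\lambda}A^{-1}$.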

To get more familiar with inverse matrices, let us briefly consider why this proposition is true:

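For the first point, note that $\mathbf{I_n}' = \mathbf{I_n}$. Thus, by the transposition rule for products,

\[A'(A^{-1})' = (A^{-1}A)' = \mathbf{I_n}' = \mathbf{I_n} \quad\text{and}\quad (A^{-1})'A' = (AA^{-1})' = \mathbf{I_n}' = \mathbf{I_n}.\]

Therefore, $(A^{-1})'$ is the inverse matrix of $A'$.

For the second point, by associativity of the matrix product,

\[(AB)(B^{-1}A^{-1}) = A(BB^{-1})A^{-1} = A\,\mathbf{I_n}A^{-1} = AA^{-1} = \mathbf{I_n},\]

and analogously, $(B^{-1}A^{-1})(AB) = \mathbf{I_n}$.

For the third, for any $\lambda\neq 0$, $\lambda\mathbf{I_n}$ is an $n\times n$ matrix, and we know that it is invertible with $(\lambda\mathbf{I_n})^{-1} = \frac{1}{\lambda}\mathbf{I_n}$. Since $\lambda A = (\lambda\mathbf{I_n})A$, the third point is a direct implication of the second.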

An important corollary is obtained by iterating on the second point:

Corollary: Invertibility of Long Matrix Products.

For any $k\in\mathbb N$, if $A_1,\ldots,A_k\in\mathbb R^{n\times n}$ are invertible, then $A_1\cdot\ldots\cdot A_k$ is invertible with inverse $A_k^{-1}\cdot\ldots\cdot A_1^{-1}$.

While this proposition and its corollary are very helpful, they still assume invertibility of some initial matrices, and therefore do not fundamentally solve the “when-and-how” problem of matrix inversion. In this course, we first focus on the “when”, i.e. the conditions under which matrices can be inverted. Here, there are four common possible approaches: the determinant, the rank, the eigenvalues and the definiteness of the matrix.

Before introducing and discussing these concepts, it is instructive to make oneself familiar with the elementary operations for matrices. Not only are they at the heart of solving systems of linear equations, but they also interact closely with all concepts discussed next.

Let’s begin with the definition:

Definition: Elementary Matrix Operations.

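For a given matrix $A$ with rows $a_1',\ldots,a_n'$, consider an operation on $A$ that changes the matrix to $\tilde A$, i.e. $A\mapsto\tilde A$ where $\tilde A$ has rows $\tilde a_1',\ldots,\tilde a_n'$. The three elementary matrix operations are

(E1) Interchange of two rows $i$ and $j$: $\tilde a_i' = a_j'$ and $\tilde a_j' = a_i'$,

(E2) Multiplication of a row $i$ by a scalar $\lambda\neq 0$: $\tilde a_i' = \lambda a_i'$,

(E3) Addition of a multiple of a row $j$ to a row $i\neq j$: $\tilde a_i' = a_i' + \lambda a_j'$,

where in each case, all other rows remain unchanged.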

To increase tractability of what we do, the following is very helpful:

Proposition: Matrix Representation of Elementary Operations.

Every elementary operation on a matrix $A\in\mathbb R^{n\times m}$ can be expressed as left-multiplication of a square matrix $E\in\mathbb R^{n\times n}$ to $A$.

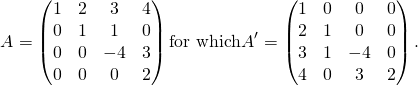

To see these rather abstract characterizations in a specific example, consider a system with 4 rows and suppose that we use (E1) to exchange rows 1 and 3, (E2) to multiply the fourth row by 5 and (E3) to add row 2, multiplied by 2, to row 3. Then, the associated matrices are

![Rendered by QuickLaTeX.com \[E^1 = \begin{pmatrix} 0 & 0 & 1 & 0\\ 0&1 & 0 & 0\\ 1 & 0 & 0 & 0\\ 0 & 0 & 0 & 1 \end{pmatrix}, \hspace{0.5cm}\Leftrightarrow\hspace{0.5cm} E^2 = \begin{pmatrix} 1 & 0 & 0 & 0\\ 0&1 & 0 & 0\\0 & 0 & 1 & 0\\ 0 & 0 & 0 & 5 \end{pmatrix}, \hspace{0.5cm}\Leftrightarrow\hspace{0.5cm} E^3 = \begin{pmatrix} 1 & 0 & 0 & 0\\ 0&1 & 0 & 0\\0 & 2 & 1 & 0\\ 0 & 0 & 0 & 1 \end{pmatrix}. \]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-f1eb7d892863bf0e69d713e4b7415fe9_l3.png)

If you have some time to spare, it may be a good exercise to come up with some examples and verify that this matrix-approach indeed does what we want it to do.
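One way to carry out such a check is sketched below in plain Python, using the three matrices $E^1$, $E^2$, $E^3$ from the example above. The test matrix `A` with 4 rows and the helper name `matmul` are made up for this illustration.

```python
# Illustrative sketch: left-multiplying by E^1, E^2, E^3 performs (E1)-(E3).

def matmul(A, B):
    """Matrix product AB for matrices given as lists of rows."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

E1 = [[0, 0, 1, 0], [0, 1, 0, 0], [1, 0, 0, 0], [0, 0, 0, 1]]  # swap rows 1 and 3
E2 = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 5]]  # row 4 times 5
E3 = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 2, 1, 0], [0, 0, 0, 1]]  # row 3 += 2 * row 2

A = [[1, 2], [3, 4], [5, 6], [7, 8]]   # arbitrary 4x2 test matrix

swapped = matmul(E1, A)   # [[5, 6], [3, 4], [1, 2], [7, 8]]
scaled = matmul(E2, A)    # [[1, 2], [3, 4], [5, 6], [35, 40]]
added = matmul(E3, A)     # [[1, 2], [3, 4], [11, 14], [7, 8]]
```

Note that `A` need not be square here: only the elementary matrices are, with dimension equal to the number of rows of `A`.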

As stated above, the practical value of this proposition lies in the fact that when we bring a matrix $A$ to another matrix $B$ using the elementary operations $E_1,\ldots,E_k$, where the index $j$ of $E_j$ indicates that $E_j$ was the $j$-th operation applied, then we can write

\[B = E_k\cdot E_{k-1}\cdot\ldots\cdot E_1 A.\]

This increases tractability of the process of going from $A$ to $B$, a fact which we will repeatedly exploit below.

The key feature of the elementary operations is that one may use them to bring any square matrix to upper triangular form as defined in the previous section (more generally, any arbitrary matrix can be brought to a “generalized” upper triangular form – since we only deal with square matrices here, we need not bother too much with this result, though):

Theorem: Triangulizability of a Matrix.

Consider a matrix $A\in\mathbb R^{n\times m}$. Then, if $n = m$, $A$ can be brought to upper triangular form applying only elementary operations to $A$.

This is helpful because, as will emerge, both the determinant and the rank condition for invertibility hold for an initial matrix $A$ if and only if they hold for an upper triangular matrix obtained from applying elementary operations to $A$. You can find the details of why this theorem applies in the companion script. For now, it is enough to know that this works. How a given matrix can be triangularized will be studied once we turn to the Gauss-Jordan algorithm, our go-to procedure for matrix inversion.

So, how do elementary operations help us when thinking about the inverse of a matrix? The connection is stunningly simple: suppose that we can bring a square $n\times n$ matrix $A$ not only to triangular, but diagonal form with an all non-zero diagonal using the operations $E_1,\ldots,E_k$, i.e.

\[E_k\cdot\ldots\cdot E_1 A = \begin{pmatrix} \lambda_1 & 0 & \cdots & 0\\ 0 & \lambda_2 & \ddots & \vdots\\ \vdots & \ddots & \ddots & 0\\ 0 & \cdots & 0 & \lambda_n\end{pmatrix} = \text{diag}\{\lambda_1,\ldots,\lambda_n\}\]

where $\lambda_i\neq 0$ for all $i\in\{1,\ldots,n\}$. Then, multiplying every row $i$ by $1/\lambda_i$, summarized by $E_{k+1},\ldots,E_{k+n}$, with $E := E_{k+n}\cdot\ldots\cdot E_{k+1}E_k\cdot\ldots\cdot E_1$, one has $EA = I_n$. This is very convenient: the transformation matrix $E$ is precisely what we call the inverse matrix of $A$, i.e. $E = A^{-1}$! Thus, we can summarize the following:

Proposition: Invertibility and Identity Transformation.

If we can use elementary operations $E_1,\ldots,E_k$ to bring a matrix $A\in\mathbb R^{n\times n}$ to the identity matrix, then $A$ is invertible with inverse $A^{-1} = E$ where $E := E_k\cdot\ldots\cdot E_1$.

Indeed, we will see later that the converse is also true. As you will see in the last section, this result is the basis of our method of computing inverse matrices, the Gauss-Jordan algorithm: to compute the matrix $A^{-1} = E_k\cdot\ldots\cdot E_1$, note that $E_k\cdot\ldots\cdot E_1 = E_k\cdot\ldots\cdot E_1 I_n$, so that when bringing an invertible matrix $A$ to identity form, we just have to apply the same operations in the same order to the identity matrix to arrive at the inverse! Don’t worry if this sounds technical. It’s not, and hopefully, the examples we will see later will convince you of this.
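To make the idea concrete before the formal treatment, here is a minimal sketch of the procedure in plain Python. It is an illustration under assumptions, not the chapter's own algorithm statement: the function name `invert`, the use of exact fractions, and the pivoting order are choices made for this example. The matrix is augmented with the identity, and every elementary operation that moves the left block towards the identity is applied to the right block as well.

```python
from fractions import Fraction

def invert(A):
    """Invert a square matrix by applying (E1)-(E3) to the block (A | I)."""
    n = len(A)
    # Augment A with the identity; Fractions keep the arithmetic exact.
    M = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(A)]
    for col in range(n):
        # Find a row at or below the diagonal with a non-zero entry in this column.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is not invertible")
        M[col], M[pivot] = M[pivot], M[col]            # (E1) exchange rows
        p = M[col][col]
        M[col] = [x / p for x in M[col]]               # (E2) scale the pivot row
        for r in range(n):
            if r != col and M[r][col] != 0:
                f = M[r][col]
                # (E3) subtract a multiple of the pivot row
                M[r] = [x - f * y for x, y in zip(M[r], M[col])]
    # The left block is now the identity, the right block is the inverse.
    return [row[n:] for row in M]

# Hypothetical example matrix.
print(invert([[2, 1], [1, 1]]))
```

Because the left block ends up as $I_n$, the right block equals $E_k\cdot\ldots\cdot E_1 I_n$, which is the inverse by the proposition above.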

Before moving on, let us briefly consider why we cared about the upper triangular matrix in the first place. After all, it is the diagonal matrix with the $\lambda_i$’s that we want, isn’t it?! Well, the thing is, once we arrive at the triangular matrix we are basically done: suppose that, starting from some arbitrary matrix $A$, we have arrived at the triangular matrix

![Rendered by QuickLaTeX.com \[ \begin{pmatrix} 2 & 9 & 3\\ 0 & 4 & -2\\ 0 & 0 & 5 \end{pmatrix}. \]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-680ef0f2cc44499b26c5180256a38daa_l3.png)

Then, multiplying the last row with $1/5$ and subsequently adding (1) $2$ times the last row to row 2 and (2) $-3$ times the last row to row 1 gives

![Rendered by QuickLaTeX.com \[ \begin{pmatrix} 2 & 9 & 0\\ 0 & 4 & 0\\ 0 & 0 & 1 \end{pmatrix}. \]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-b9ebc2adc5d90f2e8b6d4019ee4c80f6_l3.png)

Next, multiplying the second row with $1/4$ and subsequently adding it $-9$ times to the first gives the desired diagonal. With a bit more technicality and notation, this line of reasoning generalizes to upper triangular matrices of any dimension.
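Written out for this example, the last steps look as follows. The final scaling of row 1, which turns the diagonal matrix into the identity, is added here for illustration:

```latex
\[
\begin{pmatrix} 2 & 9 & 0\\ 0 & 4 & 0\\ 0 & 0 & 1 \end{pmatrix}
\;\xrightarrow{\;\frac14\,\cdot\,\text{row 2}\;}\;
\begin{pmatrix} 2 & 9 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1 \end{pmatrix}
\;\xrightarrow{\;\text{row 1}\,-\,9\,\cdot\,\text{row 2}\;}\;
\begin{pmatrix} 2 & 0 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1 \end{pmatrix}
\;\xrightarrow{\;\frac12\,\cdot\,\text{row 1}\;}\;
\begin{pmatrix} 1 & 0 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1 \end{pmatrix}
\]
```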

Thus, we can summarize: If a matrix can be brought to upper triangular form with an all non-zero diagonal using elementary operations, it can also be brought to the identity matrix using elementary operations, and therefore inverted. Because any square matrix can be triangularized, we can test invertibility by checking whether the associated triangular form has zeros on the diagonal.

Now, it is time to turn to our most common matrix invertibility check, the determinant. The very first thing to note about the determinant concept is that ONLY square matrices have a determinant, i.e. for any non-square matrix, this quantity is NOT defined!

To give some intuition on the determinant, it may be viewed as a kind of “matrix magnitude” coefficient similar to the one we studied in the vector case, which augments a vector’s directionality. This intuition is helpful because it turns out that as with real numbers, we can invert anything that is of non-zero magnitude — i.e. any matrix with non-zero determinant! However, note that unlike with a vector, a matrix with non-zero entries can have a zero determinant and thus have “zero magnitude”, so that this reasoning should be viewed with some caution.

The general definition of the determinant is unnecessarily complex for our purposes. We will define the determinant recursively here, that is, we first define it for simple $1\times 1$ matrices, and then express the determinant of an $n\times n$ matrix as a function of that of several $(n-1)\times(n-1)$ matrices, which each can be expressed with the help of determinants of $(n-2)\times(n-2)$ matrices, and so on. This recursive definition is conceptually more straightforward and sufficient for the use you will make of determinants.

Definition: Determinant.

Let $A\in\mathbb R^{n\times n}$. Then, for the determinant of $A$, denoted $\det(A)$ or $|A|$, we define

1. if $n = 1$, so that $A = (a)$ is a scalar, $\det(A) = a$, and
2. if $n\geq 2$, for

![Rendered by QuickLaTeX.com \[A = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n}\\ a_{21} & a_{22} & \cdots & a_{2n}\\\vdots & \vdots & \ddots & \vdots \\a_{n1} & a_{n2} &\cdots & a_{nn}\end{pmatrix},\]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-46659a50e6f7f3f7d6305db3edb84c0d_l3.png)

$\det(A) = \sum_{j=1}^n (-1)^{i^*+j}a_{i^*j}\det(A_{-i^*j})$ with fixed $i^*\in\{1,\ldots,n\}$ and $A_{-ij}$ as the matrix resulting from eliminating row $i$ and column $j$ from $A$, i.e.

![Rendered by QuickLaTeX.com \[A_{-ij} = \begin{pmatrix} a_{11} & \cdots & a_{1,j-1} & a_{1,j+1}&\cdots & a_{1n}\\ \vdots & & \vdots & \vdots &&\vdots\\ a_{i-1,1} & \cdots & a_{i-1,j-1} & a_{i-1,j+1}&\cdots & a_{i-1,n}\\ a_{i+1,1} & \cdots & a_{i+1,j-1} & a_{i+1,j+1}&\cdots & a_{i+1,n}\\ \vdots & & \vdots & \vdots &&\vdots\\ a_{n1} & \cdots & a_{n,j-1} & a_{n,j+1}&\cdots & a_{nn}\\ \end{pmatrix}.\]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-48f06d8ddb5f2a453d8c2a1b6001a874_l3.png)

This definition allows us to obtain the determinant for any arbitrary $n\times n$ matrix by decomposing the $A_{-i^*j}$’s into smaller matrices until we have just $1\times 1$ matrices, i.e. scalars, where we can determine the determinant using 1. The reason why there is the index $i^*$ in 2. is the following relationship:

Theorem: Laplace Expansion.

For any $A\in\mathbb R^{n\times n}$ and any $i^*, j^*\in\{1,\ldots,n\}$, it holds that

![Rendered by QuickLaTeX.com \[\det(A) = \sum_{j=1}^n (-1)^{i^*+j}a_{i^*j}\det(A_{-i^*j}) = \sum_{i=1}^n (-1)^{i+j^*}a_{ij^*}\det(A_{-ij^*}).\]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-846ac2d357a1140838edf12f8b37108e_l3.png)

Typically, we would write down the definition without the use of the star, but I put it there to emphasize that the respective index is fixed. If we fix a row index $i^*$ to calculate $\det(A)$, we call the computation method a Laplace expansion by the $i^*$-th row; if we fix the column index $j^*$, we call it a Laplace expansion by the $j^*$-th column. This method is the general way to compute determinants. However, it is quite computationally expensive, and luckily, most matrices that we deal with analytically (rather than with a computer that doesn’t mind lengthy calculations) are of manageable size where we have formulas for the determinant.
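The recursion can be sketched in a few lines of plain Python. This is an illustration only; the names `minor` and `det_laplace` are assumptions, indices are 0-based (which leaves the sign $(-1)^{i+j}$ unchanged), and the example matrix is the coefficient matrix from the introductory system of this chapter.

```python
def minor(A, i, j):
    """The matrix A_{-ij}: A without row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]

def det_laplace(A, row=0):
    """Determinant via Laplace expansion by the given row."""
    n = len(A)
    if n == 1:
        # Base case: the determinant of a scalar is the scalar itself.
        return A[0][0]
    # Sub-determinants are expanded by their first row (the default).
    return sum((-1) ** (row + j) * A[row][j] * det_laplace(minor(A, row, j))
               for j in range(n))

A = [[1, 1, 1], [0, 1, -1], [5, 0, 1]]
# Expanding by any of the three rows yields the same value.
print([det_laplace(A, row=i) for i in range(3)])
```

The number of terms grows factorially in $n$, which is why this approach is only sensible for small matrices or matrices with many zeros.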

Proposition: Determinants of “small” Matrices.

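1. If $n = 2$ and $A = \begin{pmatrix} a & b\\ c & d\end{pmatrix}$, then $\det(A) = ad - bc$.
2. If $n = 3$ and $A = \begin{pmatrix} a & b & c\\ d & e & f\\ g & h & i\end{pmatrix}$, then $\det(A) = aei + bfg + cdh - ceg - bdi - afh$.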

For 1., we can understand the rule using e.g. the Laplace expansion by the first row: note that $A_{-11} = (d)$ and $A_{-12} = (c)$. Thus, we have

![Rendered by QuickLaTeX.com \[\begin{split}\det(A) &= \sum_{j=1}^2 (-1)^{1+j}a_{1j}\det(A_{-1j}) = (-1)^{2}a_{11}\det(A_{-11}) + (-1)^{3}a_{12}\det(A_{-12}) \\&= 1\cdot a\cdot \det(d) + (-1)\cdot b\cdot \det(c) = ad-bc. \end{split}\]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-2b2a08002688374d81780bd593840d47_l3.png)

As an exercise and to convince you of the Laplace expansion, try expanding by the second column and verify that you get the same formula. You can also try to verify point 2. of this proposition using the Laplace expansion method if you desire some practice.

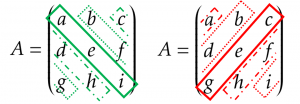

Graphically, we can think about the 3×3-determinant as adding the right-diagonal products and subtracting the left-diagonal products:

These two results are typically enough for most of our applications, and if not, they at least allow us to stop the Laplace expansion once we have broken the determinant down to $3\times 3$ matrices, for which we can directly compute the determinants, rather than going all the way down to scalars.
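The two closed-form rules translate directly into code. The sketch below is a plain-Python illustration; the function names `det2` and `det3` and the example matrices are assumptions made here. The $3\times 3$ rule is written as the sum of the three right-diagonal products minus the three left-diagonal products.

```python
def det2(A):
    """Determinant of a 2 x 2 matrix: ad - bc."""
    (a, b), (c, d) = A
    return a * d - b * c

def det3(A):
    """Determinant of a 3 x 3 matrix via the diagonal-products rule."""
    (a, b, c), (d, e, f), (g, h, i) = A
    right_diagonals = a * e * i + b * f * g + c * d * h
    left_diagonals = c * e * g + b * d * i + a * f * h
    return right_diagonals - left_diagonals

print(det2([[1, 2], [3, 4]]))
print(det3([[1, 1, 1], [0, 1, -1], [5, 0, 1]]))
```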

Equipped with these rules, two comments on the Laplace method seem worthwhile. First, when we have a row or column containing only one non-zero entry, we can reduce the dimension of determinant computation while avoiding a sum: consider the upper triangular matrix

![Rendered by QuickLaTeX.com \[A = \begin{pmatrix} 5&2&3&4\\ 0&1&1&0\\ 0&0&-4&3\\ 0&0&0&2\end{pmatrix}.\]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-dd079ba34aaa58a297c7f6433f5b7e60_l3.png)

The Laplace expansion by the first column is $\det(A) = \sum_{i=1}^4 (-1)^{i+1}a_{i1}\det(A_{-i1})$. However, for any $i\neq 1$, $a_{i1} = 0$, so that the expression reduces to $\det(A) = (-1)^{1+1}\cdot 5\cdot\det(A_{-11}) = 5\det\begin{pmatrix} 1&1&0\\ 0&-4&3\\ 0&0&2\end{pmatrix}$. Applying the $3\times 3$-rule, it results that $\det(A) = 5\cdot(-8) = -40$. Second, for triangular matrices generally, the determinant is given by the product of the diagonal elements. This product should not be confused with the trace, which sums the diagonal elements.

Definition: Trace of a Square Matrix.

For a matrix $A\in\mathbb R^{n\times n}$, the trace $\text{tr}(A)$ is given by the sum of diagonal elements, i.e. $\text{tr}(A) = \sum_{i=1}^n a_{ii}$.

Proposition: Determinant of a Triangular Matrix.

The determinant of an upper or lower triangular matrix $A\in\mathbb R^{n\times n}$ is given by the product of its diagonal elements, i.e. $\det(A) = \prod_{i=1}^n a_{ii}$.

The proposition follows from simply iterating on what we have done in the example above for a general matrix: consider the upper triangular matrix

![Rendered by QuickLaTeX.com \[A = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n}\\ 0&a_{22}&\cdots & a_{2n}\\ \vdots & \ddots &\ddots &\vdots\\ 0&\cdots&0&a_{nn}\end{pmatrix}.\]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-519a5e6ba7cc38c47cf525894b278710_l3.png)

Expanding iteratively by the first column, it follows that

\[\begin{split}\det(A) &= a_{11} \det \begin{pmatrix} a_{22} & a_{23} & \cdots & a_{2n}\\ 0&a_{33}&\cdots & a_{3n}\\ \vdots & \ddots &\ddots &\vdots\\ 0&\cdots&0&a_{nn}\end{pmatrix} = a_{11} a_{22} \det \begin{pmatrix} a_{33} & a_{34} & \cdots & a_{3n}\\ 0&a_{44}&\cdots & a_{4n}\\ \vdots & \ddots &\ddots &\vdots\\ 0&\cdots&0&a_{nn}\end{pmatrix} \\&= \ldots = \prod_{i=1}^na_{ii}.\end{split}\]

For a lower triangular matrix, the procedure is analogous; here, we just have to expand iteratively by the first row instead of the first column.

The two key take-aways are that when you have to compute the determinant of big matrices by hand, look for rows and/or columns with many zeros to apply the Laplace expansion, or if you’re lucky and face a lower or upper triangular matrix, you can directly compute the determinant from the diagonal (this is of course especially true for diagonal matrices, since they are both upper and lower triangular!). The latter fact is nice especially because the elementary matrix operations introduced above affect the determinant in a tractable way, so that it is possible to avoid Laplace altogether:

Theorem: Determinant and Elementary Operations.

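Let $A\in\mathbb R^{n\times n}$ and $\tilde A$ the resulting matrix for the respective elementary operation. Then,

1. for operation (E1), the exchange of two rows, $\det(\tilde A) = -\det(A)$,
2. for operation (E2), the multiplication of a row with $\lambda\neq 0$, $\det(\tilde A) = \lambda\det(A)$,
3. for operation (E3), the addition of a multiple of one row to another row, $\det(\tilde A) = \det(A)$.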
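These effects suggest a way of computing determinants without any Laplace expansion. The sketch below is an illustration in plain Python under stated assumptions: the name `det_by_elimination` is made up here, only row exchanges and row additions are used, and the code relies on the standard facts that an exchange of two rows flips the sign of the determinant while adding a multiple of one row to another leaves it unchanged. Once the matrix is upper triangular, the determinant is read off the diagonal.

```python
from fractions import Fraction

def det_by_elimination(A):
    """Determinant via reduction to upper triangular form."""
    n = len(A)
    M = [[Fraction(x) for x in row] for row in A]
    sign = 1
    for col in range(n):
        # Look for a non-zero entry at or below the diagonal.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            # The triangular form has a zero on the diagonal.
            return Fraction(0)
        if pivot != col:
            M[col], M[pivot] = M[pivot], M[col]   # row exchange flips the sign
            sign = -sign
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            # Adding a multiple of a row does not change the determinant.
            M[r] = [x - f * y for x, y in zip(M[r], M[col])]
    result = Fraction(sign)
    for i in range(n):
        result *= M[i][i]   # product of the diagonal of the triangular form
    return result

print(det_by_elimination([[1, 1, 1], [0, 1, -1], [5, 0, 1]]))
```

For larger matrices, this needs on the order of $n^3$ arithmetic operations, far fewer than a full Laplace expansion.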

Another important fact that will be helpful frequently is the following:

Theorem: Determinant of the Product.

Let $A, B\in\mathbb R^{n\times n}$. Then, $\det(AB) = \det(A)\det(B)$.

Note that in contrast to the product, for the sum, it does not hold in general that $\det(A+B) = \det(A) + \det(B)$.
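A simple illustration, chosen here as an example, is $A = B = I_2$: the product rule holds, but the sum rule fails.

```latex
\[
\det(I_2 I_2) = \det(I_2) = 1 = \det(I_2)\cdot\det(I_2),
\qquad
\det(I_2 + I_2) = \det\begin{pmatrix} 2 & 0\\ 0 & 2\end{pmatrix} = 4 \neq 2 = \det(I_2) + \det(I_2).
\]
```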

Now that we know how to compute a determinant, we care about its role in the existence and determination of inverse matrices. As already stated above, the rule we will rely on is inherently simple:

Theorem: Determinant and Invertibility.

Let $A\in\mathbb R^{n\times n}$. Then, $A$ is invertible if and only if $\det(A)\neq 0$.

The “only if” part is rather simple: suppose that $A$ is invertible. Note that $\det(I_n) = 1$ (cf. Proposition “Determinant of a Triangular Matrix”). Then,

\[1 = \det(I_n) = \det(AA^{-1}) = \det(A)\det(A^{-1}).\]

Therefore, $\det(A)\neq 0$. Moreover, this equation immediately establishes the following corollary:

Corollary: Determinant of the Inverse Matrix.

Let $A\in\mathbb R^{n\times n}$ and suppose that $A$ is invertible. Then, $\det(A^{-1}) = 1/\det(A)$.

The key take-away here is that invertibility is equivalent to a non-zero determinant. Consequently, because invertibility implies unique existence of the solution, so does a non-zero determinant. While the general determinant concept is a little notation-intensive, computation is easy for smaller matrices. Thus, the determinant criterion represents the most common invertibility check for “small” matrices or respectively, the most common unique solution check for “small” systems of linear equations when we have as many unknowns as equations.

As the take-away on how to compute determinants, we can summarize the following:

Clearly, we don’t always find ourselves in the comfortable situation that we have as many equations as unknowns. To determine whether these systems have a unique solution, we need to understand the rank of a matrix. The rank of a matrix links closely to our discussion of linear dependence in Chapter 1.

To limit the formal complexity of the discussion, in contrast to the companion script, we still focus on square systems here. This will allow us to talk about the role the rank of a matrix plays for its invertibility. In an excursion into non-square systems at the end of this section, you will learn what the discussion below implies for solving non-square systems.

Let’s again consider the equation system in matrix notation, $Ax = b$, and assume that $A\in\mathbb R^{n\times n}$ is square. When writing $A$ in column notation as $A = (a_1, a_2, \ldots, a_n)$, $a_j\in\mathbb R^n$ for all $j\in\{1,\ldots,n\}$, it is straightforward to check that $Ax = \sum_{j=1}^n x_j a_j$ (try to verify it for the $2\times 2$ case).
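The identity can also be checked numerically. The following plain-Python sketch is an illustration; the function names `matvec` and `column_combination`, the vector `x`, and the reuse of the coefficient matrix from the chapter's introductory system are assumptions made here. It computes $Ax$ once row by row and once as the weighted sum of the columns of $A$.

```python
def matvec(A, x):
    """Usual matrix-vector product: one inner product per row of A."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]

def column_combination(A, x):
    """Linear combination of the columns of A with coefficients x_1, ..., x_n."""
    n_rows = len(A)
    result = [0] * n_rows
    for j, xj in enumerate(x):
        column = [A[i][j] for i in range(n_rows)]      # the column a_j
        result = [r + xj * c for r, c in zip(result, column)]
    return result

A = [[1, 1, 1], [0, 1, -1], [5, 0, 1]]
x = [1, 2, 3]   # hypothetical coefficient vector
print(matvec(A, x), column_combination(A, x))
```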

Thus, the LHS of the system is nothing but a linear combination of the columns of $A$ with coefficients $x_1,\ldots,x_n$! Therefore, the problem of solving the system of linear equations can be rephrased as looking for the linear combination coefficients for the columns of our coefficient matrix $A$ that yield the vector $b$! Let us illustrate this abstract characterization with an example.

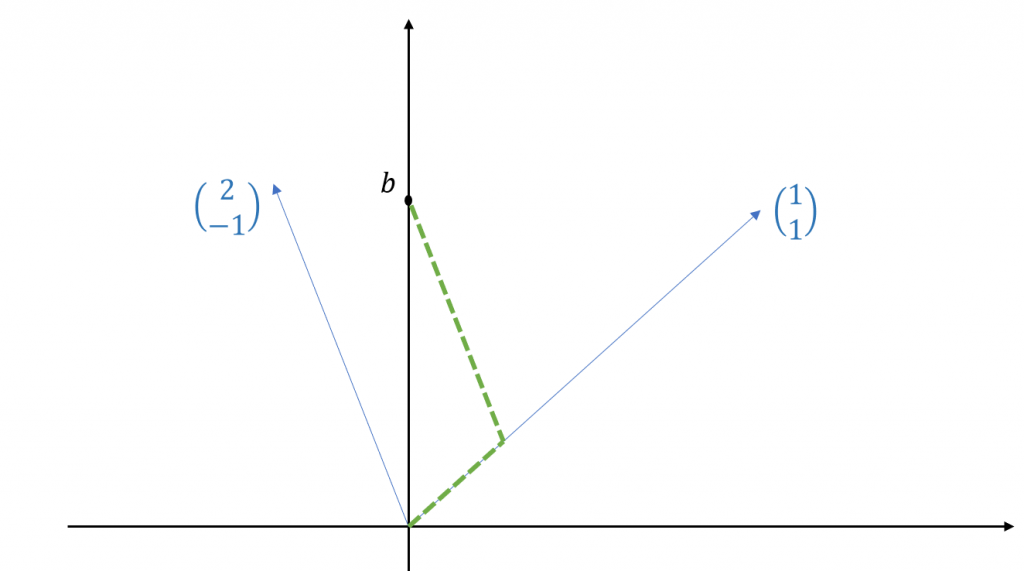

Consider the following system:

![]()

with associated matrix form

![]()

Recall that the linear combination coefficients can be viewed as combination magnitudes (or weights) of the vectors $a_1,\ldots,a_n$. Thus, less formally, we are looking for the distance we need to go in every direction indicated by a column of $A$ to arrive at the point $b$. Geometrically, you can imagine the problem like this:

Feel encouraged to repeat this graphical exercise for other points $b$; you should always arrive at a unique solution for the linear combination coefficients. The fundamental reason is that the two columns of $A$, $a_1$ and $a_2$, are linearly independent. Think a second about what it would mean geometrically if they were linearly dependent before continuing.

Done? Good! In case of linear dependence, the points lie on the same line through the origin, and either $b$ does not lie on this line and we never arrive there, i.e. there are no solutions, or it does, and an infinite number of combinations of the vectors can be used to arrive at $b$. Either way, there is no unique solution to the system.
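Both cases can be seen in a small example with linearly dependent columns; the numbers are chosen here for illustration and do not come from the text.

```latex
\[
A = \begin{pmatrix} 1 & 2\\ 2 & 4\end{pmatrix},\quad a_2 = 2a_1:
\qquad
b = \begin{pmatrix} 3\\ 6\end{pmatrix} \;\Rightarrow\; x_1 + 2x_2 = 3 \text{ (infinitely many solutions)},
\qquad
b = \begin{pmatrix} 1\\ 0\end{pmatrix} \;\Rightarrow\;
\begin{cases} x_1 + 2x_2 = 1\\ 2x_1 + 4x_2 = 0\end{cases} \text{ (no solution)}.
\]
```

In the first case, $b = 3a_1$ lies on the line through the origin spanned by $a_1$, and the second equation is just twice the first. In the second case, $b$ lies off that line, and doubling the first equation contradicts the second.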

Now, it is time to formalize the idea of the “number of directions” that are reached by a matrix and whether “a point $b$ can be reached combining the columns of $A$”.

Definition: Column Space of a Matrix.

Let $A\in\mathbb R^{n\times m}$ with columns $a_1,\ldots,a_m\in\mathbb R^n$, i.e. $A = (a_1,\ldots,a_m)$. Then, the column space $Co(A)$ of $A$ is the space “spanned by” these columns, i.e.

\[Co(A) = \{x\in\mathbb R^n: (\exists \lambda_1,\ldots,\lambda_m\in\mathbb R: x = \sum_{j=1}^m \lambda_j a_j)\}.\]

Analogously, we define the row space as the space spanned by the rows of $A$. As a technical detail, note that we called the column space a “space”, and recall from Chapter 1 that formally, this means that we need to think about the basic operations that we use. Here, we assume that as a space of real-valued vectors, we use the same operations as for the $\mathbb R^n$.

Definition: Rank of a Matrix.

Let $A\in\mathbb R^{n\times m}$. Then, the column (row) rank of $A$ is the dimension of the column (row) space of $A$. It coincides with the number of linearly independent columns (rows) of $A$ and is denoted as $\text{rk}(A)$, $\text{rank}(A)$ or $\text{rk}\,A$.

You may wonder why we use the same notation for the column and row rank, respectively. This is due to the following fact:

Theorem: Column Rank = Row Rank.

Let $A\in\mathbb R^{n\times m}$. Then, the column rank and the row rank of $A$ coincide.

Thus, “the rank” of $A$, $\text{rk}(A)$, is a well-defined object, and it does not matter whether we compute it from the columns or rows.

Definition: Full Rank.

Consider a matrix $A\in\mathbb R^{n\times m}$. Then, $A$ has full row rank if $\text{rk}(A) = n$ and $A$ has full column rank if $\text{rk}(A) = m$. If the matrix is square, $A$ has full rank if $\text{rk}(A) = n = m$.

Since column and row rank coincide and at most $n$ rows and $m$ columns can be linearly independent, we get the following result:

Corollary: A Bound for the Rank.

Let $A\in\mathbb R^{n\times m}$. Then, $\text{rk}(A)\leq\min\{n, m\}$.

From the definitions above, you should be able to observe that the rank captures the number of directions into which we can move using the columns of $A$, and that the column space is the set of all points we can reach using linear combinations of $A$’s columns. Consequently, a solution exists if and only if $b\in Co(A)$, and it is unique, i.e. there is at most one way to “reach” it, if and only if $A$ does not have more columns than $\text{rk}(A)$, that is, if every column captures an independent direction not reached by the remaining columns. In the case of a square $n\times n$ system, this reduces to $\text{rk}(A) = n$.

Let us rephrase this: if (and only if) $\text{rk}(A) = n$, then (i) every column captures an independent direction which ensures that there is no multitude of solutions, and (ii) the matrix $A$ reaches $n$ different directions, that is, it allows extension alongside every direction in the $\mathbb R^n$, so that every point $b\in\mathbb R^n$ can be reached: $Co(A) = \mathbb R^n$! To summarize:

Theorem: Rank Condition for Square Systems.

Let $A\in\mathbb R^{n\times n}$ and $b\in\mathbb R^n$. Then, $A$ is invertible or respectively, the system $Ax = b$ has a unique solution if and only if $A$ has full rank, i.e. $\text{rk}(A) = n$.

Above, we had seen that inverting a matrix can be done by bringing it to identity form using elementary operations. Note that this means that any intermediate matrix that we get in the process of applying the elementary operations is invertible as well, since it can also be brought to identity form using only elementary operations. This means that for an invertible matrix $A\in\mathbb R^{n\times n}$ with full rank $\text{rk}(A) = n$, all intermediate matrices of the inversion procedure must also have full rank! This is indeed true:

Theorem: Rank and Elementary Operations.

Let $A\in\mathbb R^{n\times m}$. Then, $\text{rk}(A)$ is invariant to elementary operations, i.e. for any $E$ associated with operations (E1) to (E3), $\text{rk}(EA) = \text{rk}(A)$.
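This invariance is what makes the rank computable in practice: we can apply elementary operations until the matrix is in a (generalized) triangular form and then count the non-zero rows. The plain-Python sketch below illustrates the idea; the function name `rank` and the example matrices are assumptions made here, and exact fractions are used to avoid rounding issues.

```python
from fractions import Fraction

def rank(A):
    """Rank of a matrix, computed with row exchanges and row additions."""
    M = [[Fraction(x) for x in row] for row in A]
    n_rows, n_cols = len(M), len(M[0])
    rk = 0   # number of pivot rows found so far
    for col in range(n_cols):
        # Search for a non-zero entry in this column among the unused rows.
        pivot = next((r for r in range(rk, n_rows) if M[r][col] != 0), None)
        if pivot is None:
            continue   # this column adds no new direction
        M[rk], M[pivot] = M[pivot], M[rk]                  # (E1)
        for r in range(rk + 1, n_rows):
            f = M[r][col] / M[rk][col]
            M[r] = [x - f * y for x, y in zip(M[r], M[rk])]  # (E3)
        rk += 1
        if rk == n_rows:
            break
    return rk

print(rank([[1, 1, 1], [0, 1, -1], [5, 0, 1]]))   # full rank
print(rank([[1, 2], [2, 4]]))                      # second row is twice the first
```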

To test your understanding, think about the following question: Consider an upper triangular matrix

![Rendered by QuickLaTeX.com \[A = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ 0 & a_{22} & \ddots & \vdots \\ \vdots & \ddots & \ddots & a_{n-1,n} \\ 0 & \cdots & 0 & a_{nn} \end{pmatrix}.\]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-8b27b0015e564d64ce2fd05f4e72c4c8_l3.png)

When are the columns of $A$ linearly independent (think of the linear independence test, and go over the rows backwards)? What does this mean for the determinant?

As already stated above, this course restricts attention to square systems. Of course, in applications, nothing guarantees that we will always work with such systems. This excursion is concerned with the intuition of non-square systems, without going into any formal, mathematical details.

Above, we had just seen that unique solutions exist in “square systems” with an invertible (square) matrix $A$. Indeed, it turns out that more generally, unless the system can be “reduced” to a square system with invertible matrix $A$, there can be no unique solution! This follows from isolated consideration of the multiple cases of general equation systems. Here, it is helpful to think of the number of rows of the matrix $A$ in $Ax = b$ as the “information content” of the equation system, that is, the number of pieces of information that we have for the vector $x$.

First, if we have fewer equations than unknowns (an “under-identified” system, $n < m$), there are always more columns than dimensions, so that it remains ambiguous which columns to combine to arrive at $b$. We can think about this as the system never having enough information to uniquely pin down the solution, as we would require at least $m$ restrictions on the value $x$ should take, one for every entry, but we only have $n$. Still, if multiple columns are linearly dependent, it can be the case that $\text{rk}(A) < n$, and certain vectors $b$ may not be reached using the columns of $A$, such that there might be no solution at all.

Conversely, with more equations than unknowns (an “over-identified” system, $n > m$), it can never be that $Co(A) = \mathbb R^n$ as the $m$ columns of $A$ can reach at most $m$ independent directions of the $\mathbb R^n$. This means that some rows contain either contradictory or redundant information given the statements in the other rows. Roughly, the $i$-th row of $A$ is contradictory if the corresponding entry in $b$, $b_i$, can not be reached through choosing $x$ in a way consistent with the other rows, and it is redundant if the $i$-th equation is already implied by the restrictions imposed through the other rows (i.e., the other equations). Redundant information can be left out of the system without changing the result, but reducing the row dimension by 1. To see conflicting and redundant information in very simplistic examples, consider the following two equation systems:

![Rendered by QuickLaTeX.com \[\begin{matrix} 4 x_1 & + & 2 x_2 &=& 6\\ x_1 & - & x_2 &=& 0\\ x_1 & + & x_2 &=& 4 \end{matrix} \hspace{0.5cm}\text{and}\hspace{0.5cm} \begin{matrix} 4 x_1 & + & 2 x_2 &=& 6\\ x_1 & - & x_2 &=& 0\\ x_1 & + & x_2 &=& 2 \end{matrix} \]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-990491a11e277d8a8db124efccf837d7_l3.png)

In both systems, we know per the second equation that $x_1 = x_2$. In the first, we get $x_1 = 1$ and $x_1 = 2$ from the first and third equations, respectively, an information conflict. In the second, equations 1 and 3 give $x_1 = 1$ and $x_1 = 1$, one of which is redundant because the other is already sufficient to conclude that $x_1 = x_2 = 1$.
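The same check can be automated for systems with three equations and two unknowns. The sketch below is an illustration in plain Python, not a general method from the text: it solves the square subsystem formed by the first two equations with Cramer's rule (a standard formula that is not introduced in this chapter) and then tests whether the third equation agrees with that solution. The function names `solve_2x2` and `classify_third_equation` are made up for this example.

```python
from fractions import Fraction

def solve_2x2(A, b):
    """Unique solution of a 2 x 2 system via Cramer's rule."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    if det == 0:
        raise ValueError("the first two equations do not pin down a unique x")
    x1 = Fraction(b[0] * A[1][1] - A[0][1] * b[1], det)
    x2 = Fraction(A[0][0] * b[1] - b[0] * A[1][0], det)
    return [x1, x2]

def classify_third_equation(A, b):
    """Is equation 3 redundant or contradictory, given equations 1 and 2?"""
    x = solve_2x2(A[:2], b[:2])
    lhs = sum(a * xi for a, xi in zip(A[2], x))   # left-hand side of equation 3
    return "redundant" if lhs == b[2] else "contradictory"

A = [[4, 2], [1, -1], [1, 1]]   # coefficients shared by both systems above
print(classify_third_equation(A, [6, 0, 4]))   # first system
print(classify_third_equation(A, [6, 0, 2]))   # second system
```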

A unique solution may potentially be obtained if (i) there is no conflicting information and (ii) we can eliminate enough redundant information to arrive at an equivalent square system. For this system, we can perform our usual determinant check.

The concepts of eigenvalues and -vectors and matrix definiteness have a purpose far beyond the context of invertibility, and presumably, you will come across them frequently throughout your master studies. However, as they don’t really belong to any of the overarching topics discussed in this course, and since they are also quite handy to check for invertibility in square systems, their introduction is placed here. Before getting started, as with the determinant, make sure to keep in mind that only square matrices have eigenvalues and definiteness! Thus, unlike the rank, the associated invertibility criteria do not generalize to non-square systems!

Definition: Eigenvectors and -values.

Consider a square matrix $A\in\mathbb{R}^{n\times n}$. Then, $x\in\mathbb{R}^n\setminus\{\mathbf{0}\}$ is said to be an eigenvector of $A$ if there exists a $\lambda\in\mathbb{R}$ such that $Ax = \lambda x$. $\lambda$ is then called an eigenvalue of $A$.

To practice quantifier notation once more, you can try to write down the definition of an eigenvalue: We call $\lambda\in\mathbb{R}$ an eigenvalue of $A$ if

\[\exists x\in\mathbb{R}^n\setminus\{\mathbf{0}\}: Ax = \lambda x.\]

Let’s think intuitively about what it means if $Ax = \lambda x$: clearly, $Ax$ and $x$ are linearly dependent: $\lambda x + (-1)\cdot Ax = \mathbf{0}$, and thus, $Ax$ and $x$ lie on the same line through the origin! Note that if $x = \mathbf{0}$, then trivially, for any $\lambda\in\mathbb{R}$ it holds that $Ax = \mathbf{0} = \lambda x$, so that any $\lambda\in\mathbb{R}$ would constitute an eigenvalue and make the definition meaningless. This is why we require that $x\neq\mathbf{0}$. On the other hand, $\lambda = 0$ can indeed be an eigenvalue, namely if there exists $x\neq\mathbf{0}$ so that $Ax = \mathbf{0}$. Then, geometrically, $Ax$ is indeed equal to the origin.
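As a small illustration of a zero eigenvalue, consider the following worked case. The matrix $B$ and the vector $x$ are illustrative choices and are not taken from the text:

\[B = \begin{pmatrix} 1 & 1\\ 1 & 1\end{pmatrix},\hspace{0.5cm} x = \begin{pmatrix} 1 \\ -1\end{pmatrix}\neq\mathbf{0}: \hspace{0.5cm} Bx = \begin{pmatrix} 1\cdot 1 + 1\cdot(-1)\\ 1\cdot 1 + 1\cdot(-1)\end{pmatrix} = \begin{pmatrix} 0 \\ 0\end{pmatrix} = 0\cdot x.\]

Hence, $\lambda = 0$ is an eigenvalue of $B$ with eigenvector $x$, although $x$ itself is not the zero vector.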

The following focuses on the answers to two questions: (i) how can we find eigenvalues (and associated eigenvectors)? and (ii) what do the eigenvalues tell us about invertibility of the matrix?

Starting with (i), we can re-write the search for an eigenvector $x$ of $A$ for an eigenvalue candidate $\lambda$ as a special system of linear equations: If $x$ is an eigenvector of $A$ for $\lambda$,

\[Ax = \lambda x \hspace{0.5cm}\Leftrightarrow\hspace{0.5cm} Ax - \lambda x = \mathbf{0} \hspace{0.5cm}\Leftrightarrow\hspace{0.5cm} (A - \lambda I)x = \mathbf{0}.\]

Thus, we have a square system of equations $Cx = b$ with coefficient matrix $C = A - \lambda I$ and solution vector $b = \mathbf{0}$. Now, how does this help? Note that if there is an eigenvector $x$ of $A$, it is not unique: if $Ax = \lambda x$, for any $c\neq 0$, $A(cx) = cAx = c\lambda x = \lambda(cx)$, and $cx$ is also an eigenvector of $A$ associated with $\lambda$! Thus, we are looking precisely for the situation where the square system does not have a unique solution, i.e. where $\det(A - \lambda I) = 0$ and $A - \lambda I$ is not invertible! This suggests that we can find the eigenvalues of $A$ by solving

\[P(\lambda) = \det(A - \lambda I) = 0,\]

i.e. by setting the characteristic polynomial of $A$ to zero, or respectively, by finding its roots. You may already have seen applications of this method in different contexts.

Let us consider an example here to facilitate understanding. Let $A = \begin{pmatrix} 3 & 2\\ 1 & 2\end{pmatrix}$. Then,

![Rendered by QuickLaTeX.com \[\begin{split} P(\lambda) & = \det(A-\lambda I) = \det\left (\begin{pmatrix} 3 & 2\\ 1 & 2\end{pmatrix} - \begin{pmatrix} \lambda & 0\\ 0 & \lambda\end{pmatrix}\right ) = \det\begin{pmatrix} 3-\lambda & 2\\ 1 & 2-\lambda\end{pmatrix}\\ &= (3-\lambda)(2-\lambda) - 2\cdot 1 = 6 - 2\lambda - 3\lambda + \lambda^2 - 2 = \lambda^2 - 5\lambda + 4. \end{split} \]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-f6951df17e0cbc2cf151e36ec08cc70c_l3.png)

Solving $P(\lambda) = 0$ can be done with the p-q-formula: $\lambda^2 - 5\lambda + 4 = 0$ is equivalent to

![Rendered by QuickLaTeX.com \[\lambda\in\left \{-\frac{-5}{2}\pm \sqrt{\left (\frac{-5}{2}\right )^2 - 4}\right \} = \left \{\frac{5}{2}\pm \sqrt{\frac{25-16}{4}}\right \} = \left \{\frac{5}{2}\pm \frac{3}{2}\right \} = \{1,4\}.\]](https://e600.uni-mannheim.de/wp-content/ql-cache/quicklatex.com-6a1e6f05086fe271d72378df4b587e7b_l3.png)

Consequently, our eigenvalue candidates are $\lambda_1 = 1$ and $\lambda_2 = 4$. To find the eigenvectors, we have to solve the equation system: for $\lambda_1 = 1$, $(A - 1\cdot I)x = \begin{pmatrix} 2 & 2\\ 1 & 1\end{pmatrix}x = \mathbf{0}$. Clearly, you can see that this matrix does not have full rank, and thus the system has a multitude of solutions. $(A - I)x = \mathbf{0}$ is equivalent to $x_1 + x_2 = 0$ or respectively, $x_1 = -x_2$. Thus, the eigenvectors of $\lambda_1 = 1$ are the (non-zero) multiples of $\begin{pmatrix} 1 \\ -1\end{pmatrix}$. The set of all multiples, $\left\{c\begin{pmatrix} 1 \\ -1\end{pmatrix}: c\in\mathbb{R}\right\}$, is the so-called eigenspace of $\lambda_1 = 1$. For $\lambda_2 = 4$, the system $(A - 4I)x = \begin{pmatrix} -1 & 2\\ 1 & -2\end{pmatrix}x = \mathbf{0}$ reduces to $x_1 = 2x_2$, so the eigenvectors are the (non-zero) multiples of $\begin{pmatrix} 2 \\ 1\end{pmatrix}$ and the eigenspace of $\lambda_2 = 4$ is $\left\{c\begin{pmatrix} 2 \\ 1\end{pmatrix}: c\in\mathbb{R}\right\}$. Note that an eigenvalue may occur “more than once” and generally be associated with multiple linearly independent eigenvectors. In such a case, we still define the eigenspace as the set of linear combinations of all linearly independent eigenvectors associated with the eigenvalue.
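The computations of this example can be double-checked numerically. Below is a minimal Python sketch restricted to the $2\times 2$ case, where the characteristic polynomial is $\lambda^2 + p\lambda + q$ with $p = -(a_{11} + a_{22})$ and $q = \det(A)$. The function names `eigenvalues_2x2` and `matvec` are hypothetical helpers introduced for illustration.

```python
import math


def eigenvalues_2x2(A):
    """Real roots of the characteristic polynomial of a 2x2 matrix (p-q formula)."""
    (a, b), (c, d) = A
    p = -(a + d)         # coefficient of lambda
    q = a * d - b * c    # constant term, equal to det(A)
    disc = (p / 2) ** 2 - q
    if disc < 0:
        return []        # no real eigenvalues
    root = math.sqrt(disc)
    return sorted({-p / 2 - root, -p / 2 + root})


def matvec(A, x):
    """Matrix-vector product Ax for a matrix given as a list of rows."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


A = [[3, 2], [1, 2]]
print(eigenvalues_2x2(A))  # roots of lambda^2 - 5*lambda + 4

# Check Ax = lambda * x for one eigenvector per eigenvalue
for lam, x in [(1, [1, -1]), (4, [2, 1])]:
    print(lam, matvec(A, x), [lam * x_i for x_i in x])
```

For each pair, the two printed vectors coincide, which confirms $Ax = \lambda x$ for the chosen representatives of the two eigenspaces.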

To the second question, how do eigenvalues help in determining invertibility? This is very simple:

Proposition: Eigenvalues and Invertibility.

Let $A\in\mathbb{R}^{n\times n}$. Then, $A$ is invertible if and only if all eigenvalues of $A$ are non-zero.

The simple reason for this relationship is that $A$ is invertible if and only if

\[0\neq\det(A) = \det(A - 0\cdot I) = P(0),\]

which is the case if and only if $0$ is not an eigenvalue of $A$.

The practical value is that sometimes, you may have already computed the eigenvalues of a matrix before investigating its invertibility. Then, this proposition can help you avoid the additional step of computing the determinant.
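To see the proposition at work, take the example matrix $A = \begin{pmatrix} 3 & 2\\ 1 & 2\end{pmatrix}$ with eigenvalues $1$ and $4$, and contrast it with a second matrix $B$ (an illustrative choice that is not taken from the text):

\[\begin{split} \det(A) &= 3\cdot 2 - 2\cdot 1 = 4 \neq 0, \hspace{0.5cm}\text{eigenvalues } \{1, 4\} \not\ni 0,\\ B &= \begin{pmatrix} 1 & 2\\ 2 & 4\end{pmatrix}: \hspace{0.5cm} \det(B - \lambda I) = (1-\lambda)(4-\lambda) - 4 = \lambda^2 - 5\lambda = \lambda(\lambda - 5). \end{split}\]

The eigenvalues of $B$ are $0$ and $5$, so $B$ is not invertible, in line with $\det(B) = 1\cdot 4 - 2\cdot 2 = 0$.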

Now, coming to the last concept: definiteness. Let’s first look at the definition:

Definition: Definiteness of a Matrix.

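A symmetric square matrix $A\in\mathbb{R}^{n\times n}$ is called

- positive definite if $x'Ax > 0$ for all $x\in\mathbb{R}^n\setminus\{\mathbf{0}\}$,
- positive semi-definite if $x'Ax \geq 0$ for all $x\in\mathbb{R}^n\setminus\{\mathbf{0}\}$,
- negative definite if $x'Ax < 0$ for all $x\in\mathbb{R}^n\setminus\{\mathbf{0}\}$,
- negative semi-definite if $x'Ax \leq 0$ for all $x\in\mathbb{R}^n\setminus\{\mathbf{0}\}$.

Otherwise, it is called indefinite.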

Note that the concept not only applies exclusively to square matrices, but also requires them to be symmetric! Further, we exclude the zero vector from definiteness because $\mathbf{0}'A\mathbf{0} = 0$ for all matrices $A$. The concept’s relation to invertibility is given through the following characterization:

Proposition: Definiteness and Eigenvalues.

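A symmetric square matrix $A\in\mathbb{R}^{n\times n}$ is

- positive (negative) definite if and only if all eigenvalues of $A$ are strictly positive (negative),
- positive (negative) semi-definite if and only if all eigenvalues of $A$ are non-negative (non-positive).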

To see this relationship intuitively, note that for any eigenvalue $\lambda$ with eigenvector $x$, it holds that

\[x'Ax = x'(\lambda x) = \lambda\cdot x'x = \lambda\|x\|_2^2,\]

where $\|\cdot\|_2$ is the Euclidean norm (recall the sum-of-squares property of the scalar product of a vector with itself). Since $x\neq\mathbf{0}$, the squared norm is strictly positive, so that the sign of $x'Ax$ corresponds to the sign of $\lambda$.

Thus, with the two previous Propositions, the following corollary emerges:

Corollary: Definiteness and Invertibility.

If $A\in\mathbb{R}^{n\times n}$ is symmetric and positive definite or negative definite, it is invertible.

This follows because positive and negative definiteness rule out zero eigenvalues. Thus, positive and negative definiteness are sufficient conditions for invertibility!
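The eigenvalue characterization translates directly into a small check. The following is a minimal Python sketch for symmetric $2\times 2$ matrices only, where the eigenvalues have the closed form $\frac{a+d}{2}\pm\sqrt{\left(\frac{a-d}{2}\right)^2 + b^2}$. The function name `classify_definiteness_2x2` and the tolerance `tol` are assumptions made for illustration.

```python
import math


def classify_definiteness_2x2(A, tol=1e-12):
    """Classify a symmetric 2x2 matrix [[a, b], [b, d]] by the signs of its eigenvalues."""
    (a, b), (c, d) = A
    if abs(b - c) > tol:
        raise ValueError("definiteness is only considered for symmetric matrices")
    # Eigenvalues of a symmetric 2x2 matrix are always real
    mean = (a + d) / 2
    radius = math.sqrt(((a - d) / 2) ** 2 + b * b)
    smallest, largest = mean - radius, mean + radius
    if smallest > tol:
        return "positive definite"
    if largest < -tol:
        return "negative definite"
    if smallest >= -tol:
        # Note: the zero matrix is both positive and negative semi-definite;
        # this sketch reports it as positive semi-definite.
        return "positive semi-definite"
    if largest <= tol:
        return "negative semi-definite"
    return "indefinite"


print(classify_definiteness_2x2([[2, 1], [1, 2]]))    # eigenvalues 1 and 3
print(classify_definiteness_2x2([[1, 1], [1, 1]]))    # eigenvalues 0 and 2
print(classify_definiteness_2x2([[1, 2], [2, 1]]))    # eigenvalues -1 and 3
```

Only the first of the three matrices is guaranteed to be invertible by the corollary. The second has a zero eigenvalue and is therefore singular, while the third is indefinite, a case about which the corollary is silent.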

Definiteness is a useful criterion especially for matrices of the form $X'X$ with a general matrix $X$ of dimension $n\times k$. The form $X'X$ ensures that the matrix $X'X$ is both square and symmetric, and hence, its definiteness is defined. Further, it is at least positive semi-definite, as for any $v\in\mathbb{R}^k$,

\[v'X'Xv = (Xv)'(Xv) = \|Xv\|_2^2 = \sum_{i=1}^n (Xv)_i^2 \geq 0.\]

To achieve positive definiteness, it must be ruled out that $Xv = \mathbf{0}$ for some $v\neq\mathbf{0}$, since whenever $Xv\neq\mathbf{0}$, the sum of squares is strictly positive. Suppose that $v\neq\mathbf{0}$. Then, what does $Xv = \mathbf{0}$ mean? We can re-write $X = (x_1, x_2, \ldots, x_k)$ in column notation, where $x_j\in\mathbb{R}^n$ denotes the $j$-th column of $X$. Then, it is easily verified that $Xv = \sum_{j=1}^k v_j x_j$. Thus, if $Xv = \mathbf{0}$ for $v\neq\mathbf{0}$, there exists a linear combination of the columns of $X$ that is equal to zero although not all of its coefficients are zero. Thus, the columns of $X$ must be linearly dependent, i.e. $\text{rk}(X) < k$.

Therefore, any matrix $X'X$ where $X$ has full column rank is invertible by the definiteness criterion. This comes in handy for instance in the context of the OLS-estimator that we had already briefly considered above, $\hat\beta = (X'X)^{-1}X'y$. Indeed, in the OLS context, we make a specific “no-multi-collinearity” assumption that ensures $\text{rk}(X) = k$.
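The role of the full column rank condition can be illustrated with two small regressor matrices. The following Python sketch assumes $k = 2$ so that a $2\times 2$ determinant suffices; the matrices `X_full` and `X_collinear` as well as the helpers `gram` and `det_2x2` are hypothetical and not taken from the text.

```python
from fractions import Fraction


def gram(X):
    """Return X'X for a matrix X given as a list of rows (exact arithmetic)."""
    k = len(X[0])
    return [[sum(Fraction(row[i]) * row[j] for row in X) for j in range(k)]
            for i in range(k)]


def det_2x2(M):
    """Determinant of a 2x2 matrix."""
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]


# n = 3 observations, k = 2 columns
X_full = [[1, 1], [1, 2], [1, 3]]        # linearly independent columns
X_collinear = [[1, 2], [1, 2], [1, 2]]   # second column = 2 * first column

print(det_2x2(gram(X_full)))       # non-zero: X'X is invertible
print(det_2x2(gram(X_collinear)))  # zero: X'X is singular
```

With perfectly collinear columns, $X'X$ is only positive semi-definite and the inverse in the OLS formula does not exist.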

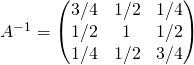

After the extensive discussion of invertibility above, let’s finally discuss how, if we have established invertibility, we can actually compute the inverse matrix. Indeed, if you managed to follow what we did thus far, you actually know already how this works: we take the elementary operations that transform an invertible matrix $A$ into the identity matrix, apply these same operations to the identity matrix, and arrive at the inverse.

Before turning to an example on how this works, we need to briefly worry about whether this always works! Thus far, we only know that when we can apply the suggested procedure, then we have found the inverse, but it remains to establish formally that it also holds that whenever there exists an inverse, the suggested procedure will identify it. This is indeed true:

Theorem: Gauß-Jordan Algorithm Validity.

Suppose that $A\in\mathbb{R}^{n\times n}$ is an invertible matrix. Then, we can apply elementary operations $E_1, \ldots, E_m$ in ascending order of the index to $A$ to arrive at the identity matrix $I_n$, and the inverse can be determined as $A^{-1} = E_m\cdot E_{m-1}\cdots E_1\cdot I_n$.
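The procedure described by the theorem can be sketched in code by carrying the identity matrix along in an augmented matrix $(A\,|\,I)$. The following is a minimal Python sketch under that assumption, using exact fractions to avoid rounding issues; the function name `gauss_jordan_inverse` is a hypothetical choice, and the pivoting strategy (first non-zero entry in the column) is one of several possible orderings of the elementary operations.

```python
from fractions import Fraction


def gauss_jordan_inverse(A):
    """Invert a square matrix by applying the same row operations to A and I."""
    n = len(A)
    # Augmented matrix (A | I)
    M = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(A)]
    for col in range(n):
        # Find a row at or below the diagonal with a non-zero entry in this column
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is not invertible")
        M[col], M[pivot] = M[pivot], M[col]           # operation: swap two rows
        pivot_value = M[col][col]
        M[col] = [v / pivot_value for v in M[col]]    # operation: scale a row
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                # operation: add a multiple of one row to another
                M[r] = [a - factor * b for a, b in zip(M[r], M[col])]
    # The left block is now I, so the right block holds the inverse
    return [row[n:] for row in M]


A = [[3, 2], [1, 2]]
for row in gauss_jordan_inverse(A):
    print([str(v) for v in row])
```

For a non-invertible input, no pivot can be found in some column and the sketch raises a `ValueError`, which mirrors the fact that such a matrix cannot be transformed into the identity matrix.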